Artificial Intelligence can give organizations a competitive edge if it’s designed and managed responsibly. Unchecked AI can pose significant risks, including bias, privacy violations, security loopholes, and system failures. These risks erode trust and can trigger regulatory or legal consequences.

But how do forward-thinking companies avoid these pitfalls while still accelerating AI-driven growth? The answer lies in structured governance.

The urgency is evident as AI adoption continues to surge: over 75% of organizations now report using AI in at least one business function. Robust AI risk management is especially critical for industries like eCommerce, healthcare, and financial services, where AI touches sensitive customer data and impacts regulated workflows. The National Institute of Standards and Technology (NIST) introduced the AI Risk Management Framework (AI RMF) as a voluntary playbook to help companies systematically identify and mitigate AI risks across the entire AI lifecycle.

This guide is designed for CTOs, product leaders, and compliance professionals at mid-sized enterprises who are looking to scale AI initiatives responsibly. If you’re deploying generative AI for eCommerce, automating decision workflows, or launching customer-facing AI features, it shows you how to use the NIST AI RMF to support compliance, trust, and long-term scalability. Following the AI RMF helps keep AI a force for positive change rather than a liability by embedding trust, transparency, and accountability into AI development and deployment.

What is the NIST AI RMF and Why Does It Matter for Enterprise AI Risk Management?

The NIST AI Risk Management Framework (AI RMF) offers a structured and repeatable approach to AI risk management. It isn’t a regulation; it’s a set of best practices that organizations can voluntarily adopt to manage AI risks responsibly.

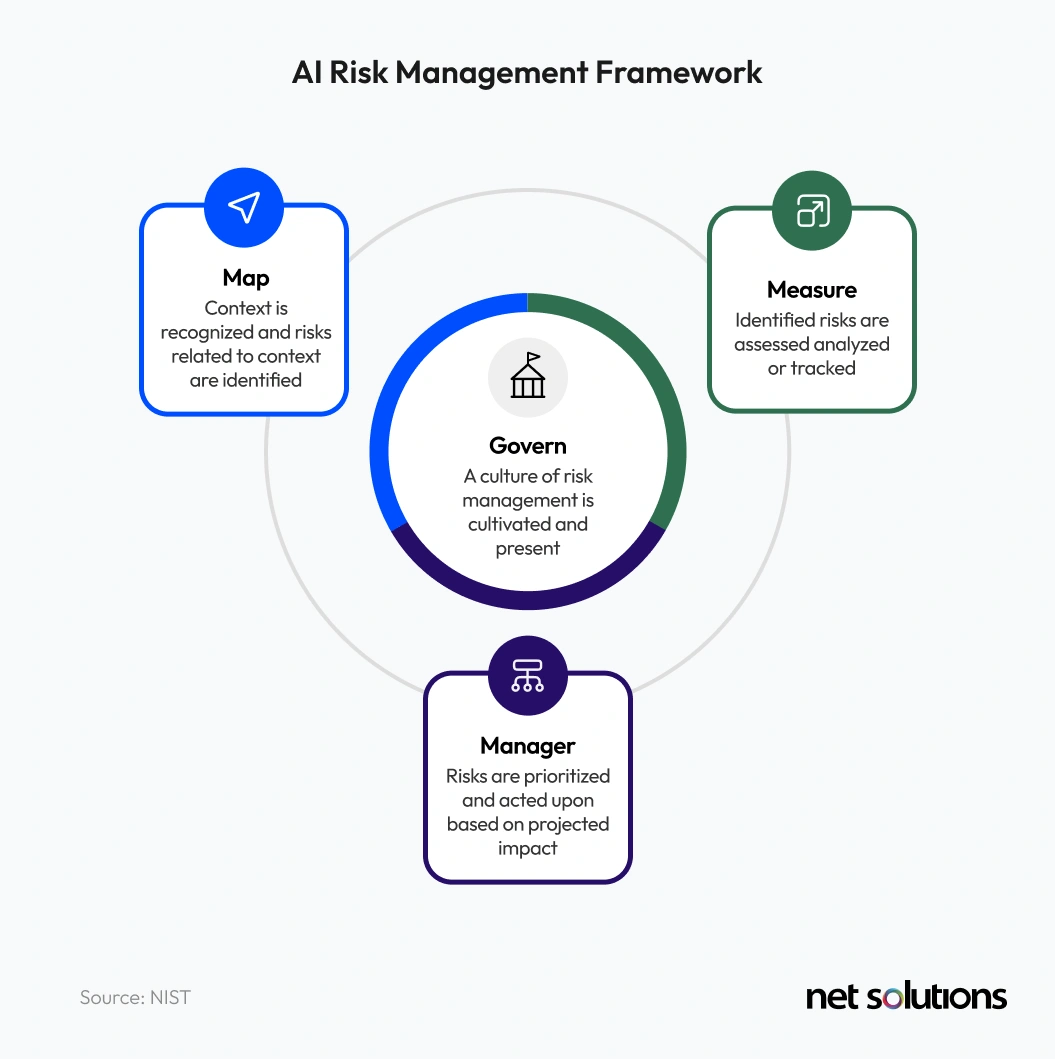

The framework is built around four core functions:

| Function | Purpose |
|---|---|
| Govern | Establish oversight, accountability, and policies for AI risk. |
| Map | Understand the AI system’s context, intended use, and risks. |
| Measure | Track AI performance and risk using metrics and tests. |
| Manage | Respond to AI risks with controls, incident handling, and continuous updates. |

Adopting this framework helps companies:

- Align AI development with laws on privacy, fairness, and related areas

- Maintain ongoing oversight and documentation

- Build stakeholder trust, from customers to regulators

- Stay ahead of future regulations

And most importantly, it helps transform your AI initiative from a high-risk experiment into a scalable, compliant, and strategic differentiator.

Despite the clear benefits, organizations face challenges. Concerns about data accuracy or bias (45%) and the lack of proprietary data to customize models (42%) are significant hurdles to AI adoption. For CTOs, product leaders, and compliance teams, the AI RMF provides a clear roadmap to govern AI responsibly, much like following cybersecurity standards today.

While the AI RMF outlines what to do, implementation requires actual tools, processes, and workflows. There’s a growing market of SaaS compliance tools—such as Drata, Vanta, and Secureframe—that help automate governance, track evidence, and monitor controls. These platforms don’t replace governance efforts, but they streamline the how, making AI RMF adoption more efficient and auditable.

In parallel, infrastructure providers like AWS, through services such as Amazon Bedrock, enable scalable, secure, and governable adoption of AI for enterprise needs. By integrating foundation models with guardrails and monitoring capabilities, businesses can go to market faster with managed risk.

How to Implement AI Risk Management Using the NIST Framework

For enterprises looking to operationalize AI responsibly, applying the NIST AI Risk Management Framework isn’t just a compliance task; it’s a business necessity. Whether the goal is to enhance customer experience, drive internal efficiency, or roll out AI-powered features, unmanaged risks can quickly escalate into reputational, legal, or financial setbacks.

Here’s a practical, business-aligned breakdown of how enterprises can implement the AI RMF across four stages—govern, map, measure, and manage—to build scalable, trustworthy AI systems.

1. Govern: Establish Oversight and Define Guardrails Early

Most enterprises struggle with ownership and accountability of AI. Without a clear governance model, initiatives lack direction, and compliance becomes a reactive response to problems.

Key Steps:

- Form a cross-functional governance committee (e.g., tech, compliance, and business leads) to own policies and decisions.

- Define acceptable AI use cases, prohibited applications, and review cycles.

- Conduct recurring training programs to align product and engineering teams with AI ethics, bias mitigation, and privacy regulations.

- Deploy compliance automation tools (e.g., Drata, Secureframe) to track controls and ensure audit readiness.
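One lightweight way to make acceptable and prohibited use cases enforceable is to keep them in a machine-readable register that teams check before starting work. The Python sketch below is illustrative only: the `UseCasePolicy` class, the `check_use_case` function, the example use case names, and the 90-day review cycle are all assumptions for this example, not something the NIST AI RMF prescribes.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class UseCasePolicy:
    """One entry in a hypothetical AI use case register."""
    name: str
    allowed: bool                 # False marks a prohibited application
    last_reviewed: date
    review_cycle_days: int = 90   # assumed quarterly review cycle

    def review_due(self, today: date) -> bool:
        return today - self.last_reviewed >= timedelta(days=self.review_cycle_days)


def check_use_case(register: dict[str, UseCasePolicy], name: str, today: date) -> str:
    """Return the governance status of a proposed use case."""
    policy = register.get(name)
    if policy is None:
        return "needs_committee_review"   # unknown use cases go to the committee
    if not policy.allowed:
        return "prohibited"
    if policy.review_due(today):
        return "allowed_review_overdue"
    return "allowed"


# Illustrative register; the entries are made up for this example.
register = {
    "sales_chat_assistant": UseCasePolicy("sales_chat_assistant", True, date(2025, 5, 1)),
    "automated_credit_denial": UseCasePolicy("automated_credit_denial", False, date(2025, 5, 1)),
}
print(check_use_case(register, "sales_chat_assistant", date(2025, 6, 1)))  # allowed
```

Routing unknown use cases to the committee by default keeps new ideas from slipping past governance simply because nobody has written a policy for them yet.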

For customer-facing AI systems, whether chat assistants, personalized product recommendations, or site search, governance is not optional. It’s the foundation for building systems that customers can trust, regulators can audit, and businesses can scale confidently.

This is especially evident in AI-powered search and discovery experiences. When organizations implement solutions like custom AWS search for eCommerce, they face similar governance challenges:

- Ensuring indexing and retrieval mechanisms are fair and unbiased

- Maintaining privacy-aware ranking models

- Avoiding over-personalization that may harm discoverability or compliance

By aligning these search systems with the principles of the NIST AI RMF, particularly in the Govern and Map stages, enterprises can introduce sophisticated AI capabilities without compromising on transparency, accountability, or legal defensibility.

In both cases, structured governance helps ensure that innovation moves hand in hand with risk management, transforming AI from a technical project into a trusted business asset.

2. Map: Understand Context, Dependencies, and Risk Exposure

A common oversight in AI projects is the lack of contextual clarity—what the AI system does, who it affects, and what risks it introduces.

Key Steps:

- Run structured mapping workshops to define AI purpose, stakeholder impact, and data flow.

- Create an AI use case inventory to classify systems by risk levels (e.g., high, medium, low).

- Assess all third-party components (pretrained models, APIs, data providers) for compliance and security posture.

In regulated sectors such as healthcare and financial services, and high-volume environments like eCommerce, risk mapping is crucial. It prevents downstream surprises and aligns procurement with compliance.
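A use case inventory can start as a small structured list with a tiering rule applied consistently. The sketch below shows one possible shape in Python. The `AIUseCase` fields, the scoring rule in `risk_tier`, and the sample entries are assumptions for illustration; your governance committee would define the real criteria.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIUseCase:
    """One row in a hypothetical AI use case inventory."""
    name: str
    customer_facing: bool
    uses_sensitive_data: bool
    regulated_workflow: bool
    third_party_components: tuple[str, ...] = ()


def risk_tier(use_case: AIUseCase) -> str:
    """Assign a tier using a simple, assumed scoring rule."""
    flags = [
        use_case.customer_facing,
        use_case.uses_sensitive_data,
        use_case.regulated_workflow,
    ]
    score = sum(flags)
    # Assumption: anything touching a regulated workflow is always high risk.
    if use_case.regulated_workflow or score >= 2:
        return "high"
    if score == 1:
        return "medium"
    return "low"


inventory = [
    AIUseCase("sales_chat_assistant", True, False, False, ("hosted LLM API",)),
    AIUseCase("claims_triage", False, True, True),
    AIUseCase("internal_doc_search", False, False, False),
]
for use_case in inventory:
    print(use_case.name, risk_tier(use_case), use_case.third_party_components)
```

Recording third-party components next to each use case makes it easier to see which vendor reviews a given system depends on.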

3. Measure: Define Metrics and Monitor in Real Time

Without reliable metrics, it’s nearly impossible to tell whether your AI is functioning as intended, or if it’s causing harm.

Key Steps:

- Set business-aligned metrics: accuracy, bias, latency, uptime, explainability.

- Instrument systems using observability tools like Amazon CloudWatch or Datadog for real-time visibility.

- Log inputs and outputs to enable traceability and audits.

- Conduct adversarial and scenario testing to simulate failure cases and measure fairness.
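Logging inputs and outputs for traceability can be as simple as wrapping every model call so it emits one structured record. Here is a minimal Python sketch of that idea. The `traced_call` wrapper, the record fields, and the `fake_model` stand-in are hypothetical; in practice the records would be shipped to your observability tool rather than kept in a list, and sensitive fields may need redaction first.

```python
import json
import time
import uuid
from datetime import datetime, timezone
from typing import Callable


def traced_call(model_fn: Callable[[str], str], prompt: str, sink: list[str]) -> str:
    """Call the model and append one JSON audit record to `sink`."""
    started = time.perf_counter()
    output = model_fn(prompt)
    latency_ms = (time.perf_counter() - started) * 1000
    record = {
        "trace_id": str(uuid.uuid4()),                      # unique ID for audits
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "input": prompt,
        "output": output,
        "latency_ms": round(latency_ms, 2),
    }
    sink.append(json.dumps(record))
    return output


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call."""
    return f"echo: {prompt}"


audit_log: list[str] = []
answer = traced_call(fake_model, "What is the dilution ratio?", audit_log)
print(audit_log[0])
```

Because latency is captured in the same record as the input and output, one log stream can feed both the audit trail and the latency metric.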

Measurement becomes especially critical in environments where AI directly shapes customer experiences—such as in eCommerce product discovery. In these scenarios, generative AI models are used to drive personalized recommendations, dynamic search results, and guided shopping experiences. But without continuous oversight, these systems can drift—serving irrelevant, biased, or even misleading content.

Maintaining customer trust relies on tracking not just performance, but also fairness, diversity, and explainability of recommendations. The “Measure” function of the NIST AI RMF becomes indispensable here, enabling organizations to:

- Define business-aligned metrics (e.g., relevance, conversion lift, exposure fairness)

- Monitor real-time outputs and system health

- Run scenario tests to detect bias or systemic failures before they impact CX

Continuous measurement transforms AI from a black box into a business-aligned, feedback-driven engine—especially in fast-moving digital commerce environments.
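To make a metric like exposure fairness concrete, here is one simple way it could be computed from recommendation logs. This is a sketch under stated assumptions: "exposure" is taken to mean the share of recommendation slots each group (for example, a brand or seller) receives, and the `exposure_shares` and `exposure_ratio` helpers and the sample data are invented for illustration. Other definitions, such as position-weighted exposure, may suit your catalog better.

```python
from collections import Counter


def exposure_shares(impressions: list[str]) -> dict[str, float]:
    """Share of recommendation slots each group received."""
    if not impressions:
        raise ValueError("no impressions to measure")
    counts = Counter(impressions)
    total = len(impressions)
    return {group: count / total for group, count in counts.items()}


def exposure_ratio(shares: dict[str, float]) -> float:
    """Least-exposed share divided by most-exposed share (1.0 means equal exposure)."""
    return min(shares.values()) / max(shares.values())


# Each entry is the group shown in one recommendation slot (made-up data).
impressions = ["brand_a"] * 6 + ["brand_b"] * 3 + ["brand_c"] * 1
shares = exposure_shares(impressions)
print(shares)
print(round(exposure_ratio(shares), 3))
```

Note that a group with zero impressions never appears in the log, so a production version would need the full list of eligible groups to catch items that are never shown at all.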

4. Manage: Prepare for Incidents and Scale Responsibly

AI risk doesn’t end at deployment. Enterprises must prepare for drift, failures, and evolving compliance obligations.

Key Steps:

- Define risk tolerance per use case, especially for customer-facing or operationally critical systems.

- Create incident response playbooks that outline handling for model failure, security anomalies, or bias reports.

- Use automation tools to detect control gaps, generate reports, and escalate risks.

- Schedule regular audits and updates to keep documentation, roles, and procedures current.
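Risk tolerance becomes actionable once it is written down as thresholds that monitoring can check automatically. The following Python sketch shows one way to express that. The `Tolerance` fields, the metric names, the threshold values, and the escalation labels are all assumptions for this example; real values come from your own risk appetite and incident playbooks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tolerance:
    """Hypothetical per-use-case risk tolerance."""
    min_accuracy: float
    max_bias_gap: float          # largest acceptable metric gap between groups
    max_p95_latency_ms: float


def breaches(metrics: dict[str, float], tolerance: Tolerance) -> list[str]:
    """List the metrics that fall outside the tolerance."""
    found = []
    if metrics["accuracy"] < tolerance.min_accuracy:
        found.append("accuracy")
    if metrics["bias_gap"] > tolerance.max_bias_gap:
        found.append("bias_gap")
    if metrics["p95_latency_ms"] > tolerance.max_p95_latency_ms:
        found.append("p95_latency_ms")
    return found


def escalation(breached: list[str], customer_facing: bool) -> str:
    """Pick an escalation path; the labels are placeholders for playbook steps."""
    if not breached:
        return "none"
    if customer_facing or "bias_gap" in breached:
        return "page_incident_owner"
    return "open_ticket"


chat_tolerance = Tolerance(min_accuracy=0.90, max_bias_gap=0.05, max_p95_latency_ms=2000)
observed = {"accuracy": 0.87, "bias_gap": 0.02, "p95_latency_ms": 1500}
print(breaches(observed, chat_tolerance), escalation(breaches(observed, chat_tolerance), True))
```

Keeping the tolerance separate from the escalation rule lets the same check run for every use case while each one carries its own thresholds.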

This approach becomes mission-critical in environments such as eCommerce, healthcare, and financial services, where AI is embedded in core customer journeys, clinical workflows, or financial decision-making. The ability to manage incidents in real-time ensures that AI doesn’t just scale; it stays aligned with business objectives, regulatory standards, and user trust. Managing AI responsibly isn’t just a safeguard—it’s a growth enabler.

Case Study: How a Mid-Sized Enterprise Operationalized AI Governance in 6 Weeks Using the NIST AI RMF

To demonstrate how the NIST AI Risk Management Framework works in practice, here’s how Net Solutions helped a mid-sized client. A North American commercial cleaning provider engaged us to implement an AI-powered text and speech chat assistant for their sales team. The business goal was clear: enable quick, consistent access to verified product information, without exposing the organization to undue risk. To ensure the AI system was trustworthy, compliant, and scalable, we applied the NIST AI Risk Management Framework (AI RMF) across policy, process, and technology.

Translating the Framework into Action

Here’s how we turned the framework into concrete action:

1. Established AI Governance Structures

- Formed an AI Governance Committee with CTO, Compliance Lead, and Client Managers

- Defined decision-making roles and instituted a monthly review process

- Documented acceptable and prohibited AI use cases, reviewed quarterly

2. Automated Compliance Tracking

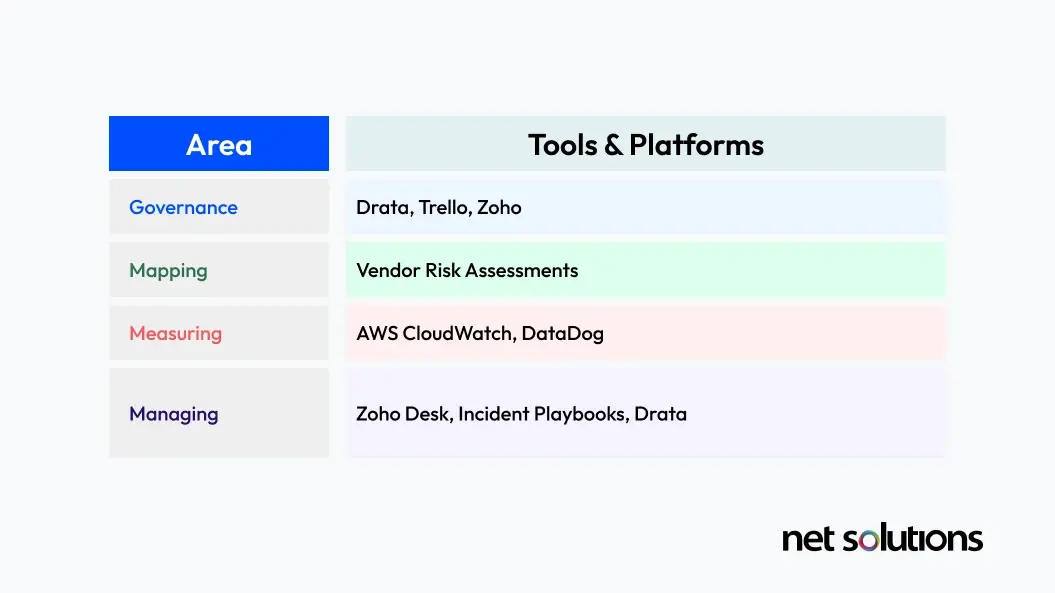

- Implemented Drata to map AI RMF controls and automate evidence tracking

- Set up bi-weekly compliance checks through real-time dashboards

3. Enabled Team Awareness & Readiness

- Conducted training sessions on AI ethics, bias, privacy, and relevant labor laws

4. Mapped Use Case Context and Risk

- Ran workshops to define AI purpose, stakeholder impact, and business implications

- Created a use case inventory with risk categorization (low/medium/high)

- Evaluated third-party APIs (OpenAI, AWS) and stored SOC 2/GDPR reports

5. Instrumented Real-Time Monitoring

- Defined metrics for accuracy, bias, latency, and system uptime

- Used Amazon CloudWatch and Datadog for monitoring and alerting

- Logged AI inputs and outputs for traceability and audits

6. Conducted Resilience & Fairness Testing

- Simulated adversarial and bias scenarios across varied user personas

- Established pipelines to monitor performance deviations

- Integrated Zoho Desk to trigger alerts for immediate triage
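For readers wondering what a check for performance deviations might look like, here is a generic Python sketch. It is not the pipeline used in this engagement. It assumes you have a baseline of daily accuracy scores and flags a recent window whose mean sits too many standard errors away. The `deviates` function, the threshold of 3.0, and the sample numbers are illustrative assumptions.

```python
from statistics import mean, stdev


def deviates(baseline: list[float], recent: list[float], z_threshold: float = 3.0) -> bool:
    """Flag the recent window if its mean is far from the baseline mean."""
    if len(baseline) < 2 or not recent:
        raise ValueError("need at least 2 baseline points and 1 recent point")
    baseline_mean = mean(baseline)
    baseline_sd = stdev(baseline)
    if baseline_sd == 0:
        # A perfectly flat baseline: any change in the mean counts as a deviation.
        return mean(recent) != baseline_mean
    standard_error = baseline_sd / len(recent) ** 0.5
    z_score = abs(mean(recent) - baseline_mean) / standard_error
    return z_score > z_threshold


# Made-up daily accuracy scores.
baseline_scores = [0.90, 0.92, 0.91, 0.89, 0.93, 0.90, 0.91, 0.92]
print(deviates(baseline_scores, [0.80, 0.82, 0.81, 0.79]))  # True: a clear drop
print(deviates(baseline_scores, [0.91, 0.90, 0.92, 0.91]))  # False: within normal range
```

A result of `True` is the kind of signal that could open a ticket in a help desk tool for triage.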

7. Implemented Risk Controls and Incident Management

- Set risk tolerance thresholds and human-in-the-loop requirements for critical queries

- Developed AI incident response playbooks and escalation workflows

- Logged all changes and reviews in Confluence and Drata; conducted semi-annual system audits
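A human-in-the-loop requirement for critical queries can be expressed as a small routing rule in front of the assistant. The Python sketch below illustrates the idea only. The `CRITICAL_TOPICS` keywords, the confidence score, the 0.8 threshold, and the `route` function are hypothetical and are not the rules used in this project.

```python
# Hypothetical keywords that mark a query as critical for a sales assistant.
CRITICAL_TOPICS = ("pricing", "safety data", "contract")


def route(query: str, confidence: float, min_confidence: float = 0.8) -> str:
    """Decide whether the assistant may answer or a person must review first."""
    lowered = query.lower()
    if any(topic in lowered for topic in CRITICAL_TOPICS):
        return "human_review"        # critical topics always get a reviewer
    if confidence < min_confidence:
        return "human_review"        # low-confidence answers are held back
    return "auto_answer"


print(route("What is the contract renewal term?", confidence=0.95))  # human_review
print(route("Which mop heads fit model X?", confidence=0.92))        # auto_answer
```

Keyword matching is deliberately crude here; a real deployment might classify queries with a model, but the routing decision would look much the same.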

Tools & Technology Stack Used

The result was a business-aligned, compliant AI solution that improved sales responsiveness without compromising on governance, ethics, or reliability.

Start Your AI RMF Journey Today

Building AI you can trust requires more than intent—it requires structured implementation. As a custom AI development company, we partner with mid-sized organizations to help them align with the NIST AI RMF, using a combination of:

- Technical governance practices

- Automated compliance platforms (like Drata)

- Real-time monitoring and incident management

- Industry-specific AI integration services

And here’s the best part: you don’t need to start from scratch. Structured implementation matters because only 26% of companies have developed the capabilities needed to move beyond proofs of concept and generate tangible value from AI.

As AI continues to transform core business functions, from customer engagement to product discovery, managing risk is no longer optional. The NIST AI Risk Management Framework provides a clear and structured approach to identifying, assessing, and mitigating AI-related risks throughout the entire development lifecycle.

Aligning with this framework isn’t just about compliance; it’s about building AI systems that are transparent, auditable, and scalable. Whether you’re deploying generative AI for eCommerce or automating internal decision-making workflows, embedding risk management practices early ensures that innovation doesn’t come at the expense of trust, safety, or long-term sustainability.

By combining governance, measurement, and incident response with the right tools and processes, businesses can move beyond experimentation and start extracting real value from AI—confidently and responsibly.

Ready to Operationalize AI with Confidence?

Let Net Solutions help you implement the NIST AI Risk Management Framework using:

- Governance best practices

- Drata, Amazon Bedrock, and real-time monitoring

- Custom AI feature integration for your business use case


FAQs

1. Is the NIST AI RMF mandatory?

No, it’s a voluntary framework—but it’s widely adopted as a best practice, especially in regulated or customer-facing industries.

2. How long does implementation take?

Most mid-sized organizations can begin executing the four core AI RMF functions within 4–6 weeks with the right support and tools.

3. Can we use the AI RMF if we rely on third-party models like OpenAI or AWS Bedrock?

Absolutely. In fact, mapping and measuring risks from external models is a core part of the framework’s design.