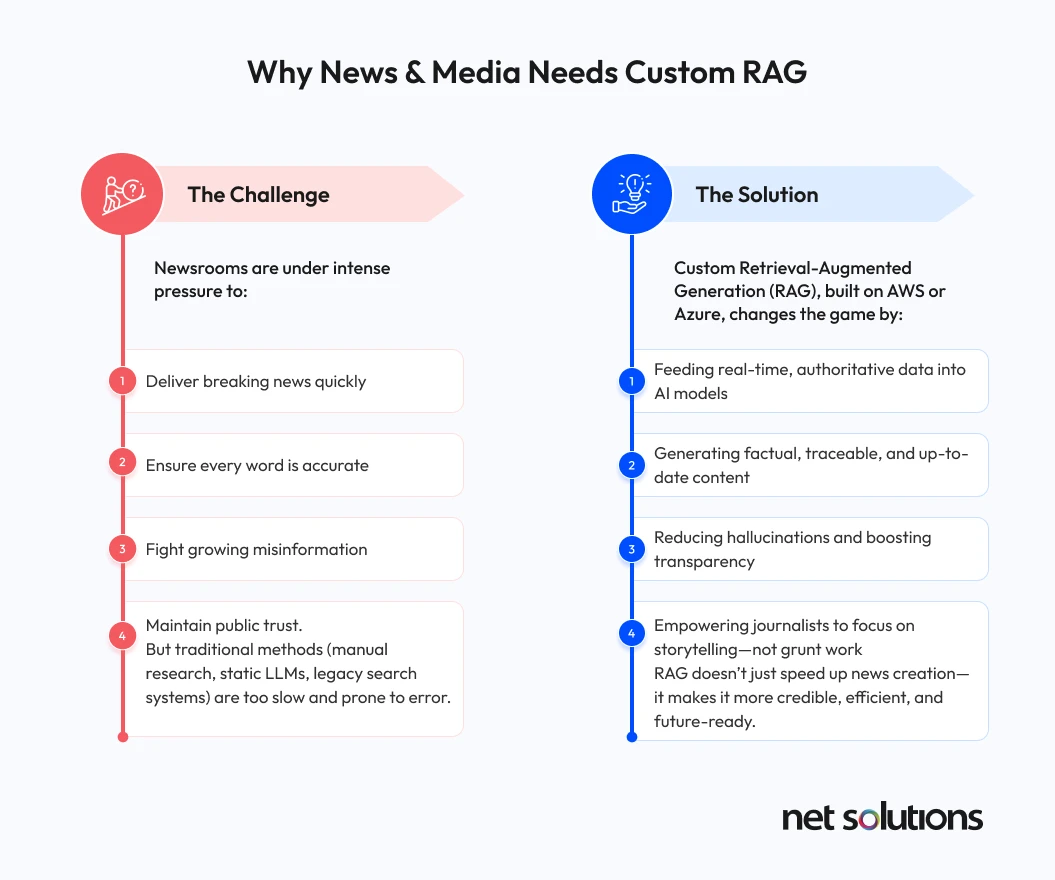

The news industry is wrestling with information overload, constant demands for updates, and widespread misinformation, all while trying to maintain public trust. Generative AI offers powerful content creation tools, but its tendency to “hallucinate” or rely on outdated data poses a risk where accuracy is paramount.

Retrieval-Augmented Generation (RAG) offers a solution. It lets AI access and integrate current, authoritative external information before generating responses. This analysis explores how custom RAG, especially on platforms like AWS and Azure, can revolutionize news operations.

Adopting custom RAG can dramatically boost accuracy, provide real-time context, and improve efficiency, ultimately strengthening journalistic integrity and rebuilding reader trust. In an era of misinformation, RAG isn’t just an efficiency tool; it’s a strategic necessity for combating inaccuracies and reestablishing trust in journalism.

Understanding Retrieval-Augmented Generation (RAG): The Core Mechanics

Retrieval-augmented generation (RAG) is an AI framework that improves the output of large language models (LLMs) by having them reference an authoritative external knowledge base, beyond their original training data, before formulating a response. A traditional LLM is limited to the static knowledge embedded during pre-training. RAG adds a dynamic retrieval component, so outputs can be tailored to a specific domain or context. This improves relevance and accuracy without the computational and financial cost of continuously retraining the model.

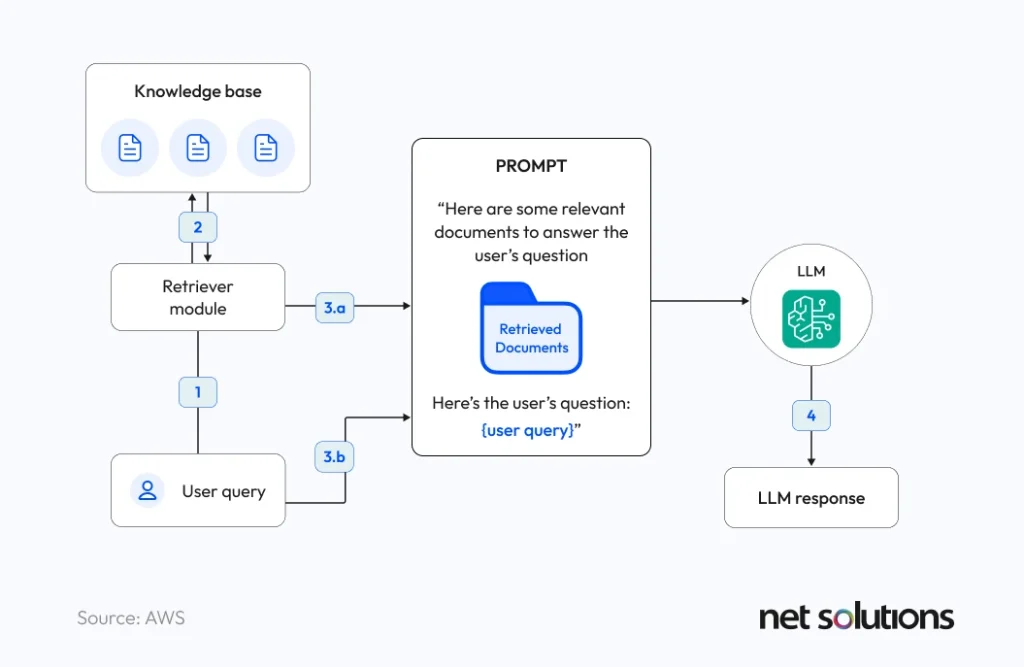

This is how AWS defines the anatomy of RAG:

RAG is an efficient way to provide an FM with additional knowledge by using external data sources and is depicted in the following diagram:

- Retrieval: Based on a user’s question (1), relevant information is retrieved from a knowledge base (2) (for example, an OpenSearch index).

- Augmentation: The retrieved information is added to the FM prompt (3.a) to augment its knowledge, along with the user query (3.b).

- Generation: The FM generates an answer (4) by using the information provided in the prompt.

Here’s a general diagram of an RAG workflow. From left to right are the retrieval, the augmentation, and the generation. In practice, the knowledge base is often a vector store.
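The three stages above can be sketched in a few lines of Python. This is a minimal illustration, not AWS's implementation: the `Document` type, the word-overlap retriever (a stand-in for vector search), and the `generate` placeholder are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words without punctuation."""
    return {word.strip(".,!?;:\"'()").lower() for word in text.split()} - {""}


def retrieve(question: str, knowledge_base: list[Document], top_k: int = 2) -> list[Document]:
    """Retrieval: rank documents by word overlap with the question.

    A real system would typically use embeddings and a vector store instead.
    """
    q_tokens = tokenize(question)
    scored = [(len(q_tokens & tokenize(doc.text)), doc) for doc in knowledge_base]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def augment(question: str, passages: list[Document]) -> str:
    """Augmentation: place the retrieved passages and the user query in one prompt."""
    context = "\n".join(f"[{doc.doc_id}] {doc.text}" for doc in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


def generate(prompt: str) -> str:
    """Generation: placeholder for the foundation model call (hypothetical)."""
    return f"(model answer based on a {len(prompt)}-character prompt)"


knowledge_base = [
    Document("a1", "The city council approved the budget on Tuesday."),
    Document("a2", "The local team won the championship game."),
    Document("a3", "Council members will vote on the transit plan next week."),
]
question = "What did the city council approve?"
passages = retrieve(question, knowledge_base)
prompt = augment(question, passages)
print(generate(prompt))
```

Swapping `retrieve` for a vector search and `generate` for a real model call leaves the overall shape of the pipeline unchanged.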

Why News & Media Needs Custom RAG

The news and media industry operates at the critical nexus of speed, accuracy, and trust. Custom RAG solutions offer a profound strategic advantage by directly addressing these core demands, enabling organizations to navigate the challenges of the digital age with greater efficacy and credibility.

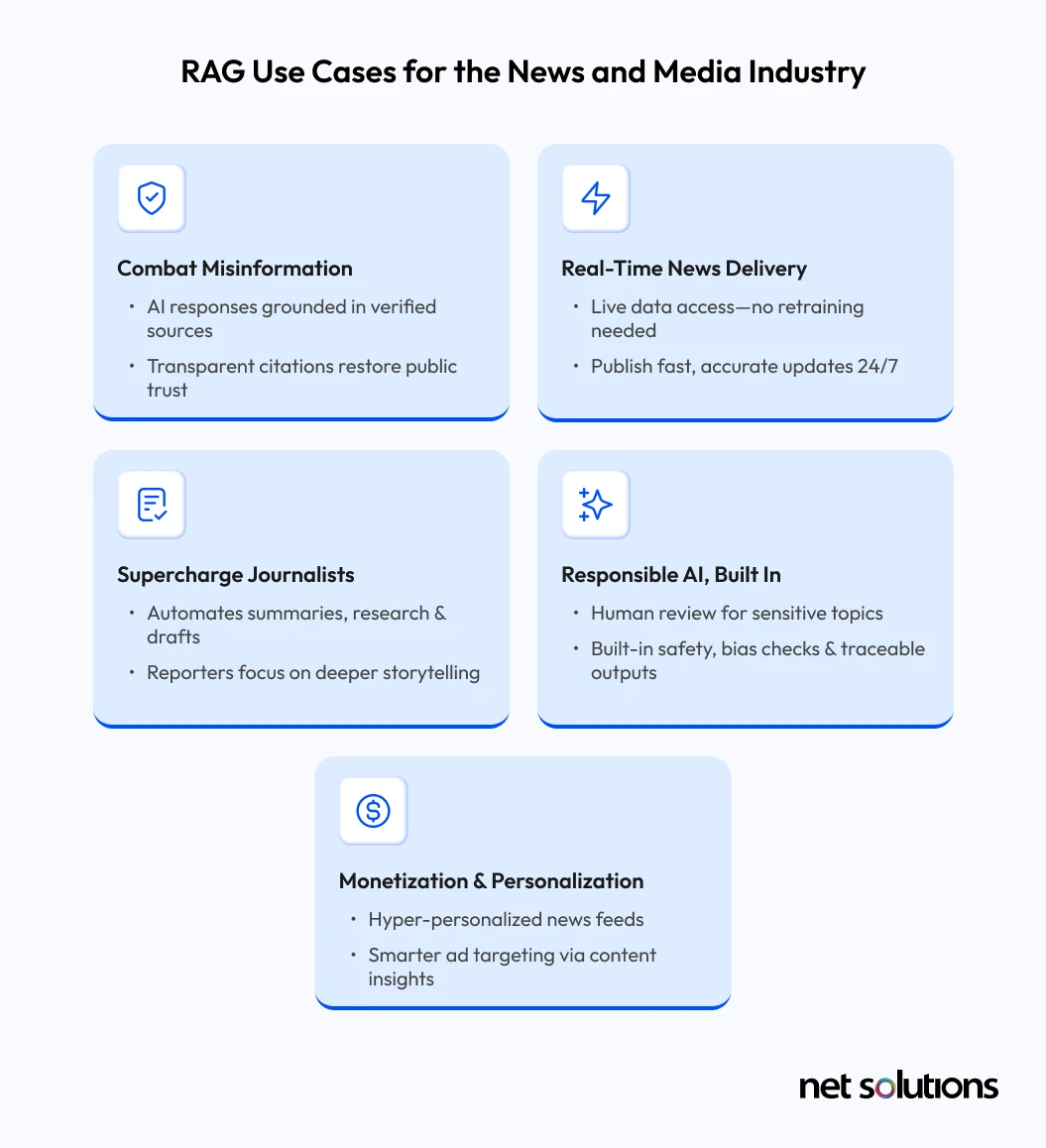

1. Enhancing Factual Accuracy and Combating Misinformation

News organizations face intense pressure to deliver accurate and trustworthy information. Retrieval-Augmented Generation (RAG) presents a powerful solution that addresses key challenges in AI-driven content creation, ultimately helping to rebuild public trust.

- Grounding AI Outputs in Verifiable Data: RAG ensures that AI-generated content is firmly rooted in facts. It does this by pulling relevant information from internal archives, verified databases, or real-time news feeds. For an industry where spreading unverified information can severely damage reputation and erode trust, this “grounding” mechanism is essential.

- Mitigating AI Hallucinations: One of the biggest concerns with large language models (LLMs) is their tendency to “hallucinate,” producing plausible-sounding but incorrect information. RAG reduces this risk by basing the generated output on retrieved content, which improves factual reliability. For instance, the groundedness detection feature in Microsoft’s Azure AI Content Safety is designed to flag potential hallucinations by checking AI-generated statements against source documents.

- Enabling Source Attribution and Traceability: A core benefit of RAG is its ability to provide clear citations, directly linking generated responses back to their specific source documents. This transparent audit trail is invaluable for journalistic integrity, allowing both internal teams and external users to verify the accuracy of the information. This capability fosters transparency and builds credibility with the audience.

By reducing hallucinations, providing explicit source attribution, and generally enhancing factual accuracy, RAG directly tackles the reasons behind declining public trust in news. In an environment rife with misinformation, the ability for readers to easily see the origin of AI-generated information builds confidence. This empowers “data-driven journalism that resonates with audiences seeking accurate and insightful reporting,” fostering transparency and credibility. This isn’t just a technical feature; it’s a strategic necessity for news organizations to stand out as reliable sources in a crowded and often untrustworthy information landscape, ultimately rebuilding their most valuable asset: public trust.
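As a rough sketch of how source attribution might be enforced in practice, the snippet below numbers each retrieved source in the prompt and then checks a generated answer for citation markers. The `[n]` marker convention, the function names, and the sample source IDs are assumptions for illustration only.

```python
import re


def build_cited_prompt(question: str, sources: dict[str, str]) -> tuple[str, dict[int, str]]:
    """Number each source so the model can cite it as [1], [2], and so on."""
    numbered = {i: source_id for i, source_id in enumerate(sources, start=1)}
    lines = [f"[{i}] {sources[source_id]}" for i, source_id in numbered.items()]
    prompt = (
        "Answer the question and cite sources as [n] after each claim.\n\n"
        "Sources:\n" + "\n".join(lines) + f"\n\nQuestion: {question}"
    )
    return prompt, numbered


def check_citations(answer: str, numbered: dict[int, str]) -> dict[str, list]:
    """Report which sources were cited, which markers are invalid, and which sentences lack a citation."""
    cited = {int(n) for n in re.findall(r"\[(\d+)\]", answer)}
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if s]
    return {
        "cited_sources": [numbered[n] for n in sorted(cited) if n in numbered],
        "unknown_markers": sorted(n for n in cited if n not in numbered),
        "uncited_sentences": [s for s in sentences if not re.search(r"\[\d+\]", s)],
    }


sources = {
    "archive/2024-budget": "The council approved the budget on Tuesday.",
    "wire/turnout": "Turnout at the meeting was high.",
}
prompt, numbered = build_cited_prompt("What happened at the council meeting?", sources)
answer = "The council approved the budget [1]. Residents protested [3]. Officials were pleased."
report = check_citations(answer, numbered)
print(report)
```

A check like this only confirms that citations exist and point at retrieved sources. Whether the cited source actually supports the claim still needs an editor or a separate groundedness check.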

2. Delivering Real-Time Context and Accelerating Breaking News

In the era of 24/7 news cycles, speed alone is no longer enough—accuracy, context, and personalization are equally critical. Journalists must balance the need to break stories first with the responsibility to report verified information. Traditional tools often fail to meet this demand. This is where RAG fundamentally changes the equation. By integrating real-time data into the generative workflow, RAG enables newsrooms to respond instantly to unfolding events while ensuring that content is grounded rather than speculative.

- Accessing Dynamic, Up-to-the-Minute Information: RAG models possess the crucial ability to tap into dynamic, constantly updated internal and external data sources. This enables them to access and utilize the most up-to-date information without requiring extensive model retraining. This capability is indispensable for news organizations that must remain at the forefront of breaking stories and provide timely, accurate updates as events unfold.

- Streamlining Content Generation for Rapid Reporting: RAG significantly accelerates the time-to-market for breaking news. Journalists can leverage RAG to swiftly access real-time data streams and historical background information, enabling them to produce factually consistent content with remarkable efficiency. This enhancement in research capabilities directly translates into quicker turnaround times for news stories without compromising on accuracy or depth.

- Enabling Personalized News Delivery: RAG can profoundly enhance recommendation systems by retrieving user-specific information and generating highly personalized suggestions. For the news industry, this translates into hyper-personalization, allowing outlets to deliver tailored content based on individual reader preferences and browsing history, thereby significantly enhancing user engagement and retention.

Custom RAG is more than an AI enhancement—it’s a strategic capability that blends journalistic values with cutting-edge automation. It delivers speed, transparency, and accuracy at scale, empowering newsrooms to operate with greater integrity and agility. For organizations seeking to remain trusted and competitive in the digital age, adopting RAG isn’t just forward-thinking—it’s essential.
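One way a retriever could favor up-to-the-minute material is to discount each document's relevance score by its age. The exponential half-life weighting below is an assumption chosen for illustration, not a technique the platforms above prescribe, and the document IDs and scores are made up.

```python
from datetime import datetime, timedelta, timezone


def recency_weight(published: datetime, now: datetime, half_life_hours: float = 6.0) -> float:
    """Return a weight that halves every `half_life_hours` of document age."""
    age_hours = max((now - published).total_seconds() / 3600.0, 0.0)
    return 0.5 ** (age_hours / half_life_hours)


def rank_by_freshness(
    candidates: list[tuple[str, float, datetime]],
    now: datetime,
    half_life_hours: float = 6.0,
) -> list[tuple[str, float]]:
    """Combine relevance and recency, then sort with the best candidate first.

    Each candidate is (doc_id, relevance_score, published_time).
    """
    scored = [
        (doc_id, relevance * recency_weight(published, now, half_life_hours))
        for doc_id, relevance, published in candidates
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
candidates = [
    ("background-explainer", 0.9, now - timedelta(hours=24)),
    ("live-update", 0.6, now - timedelta(hours=1)),
]
ranking = rank_by_freshness(candidates, now)
print(ranking)
```

A short half-life suits breaking news, while archive research would likely use a much longer one or no decay at all.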

3. Boosting Operational Efficiency and Journalist Productivity

The newsroom of today operates under mounting pressure—not just to publish quickly, but to maintain depth, accuracy, and volume across multiple channels. Journalists are stretched thin between deadlines and the increasing demand for multi-format content. RAG helps rebalance this equation by automating the most repetitive, time-consuming backend tasks. From summarizing lengthy reports to generating social posts in seconds, RAG allows journalists to focus on what truly differentiates their work: insight, analysis, and storytelling.

- Automating Content Summarization, Research, and Data Extraction: RAG is highly effective in assisting with the generation of high-quality content summaries from articles, reports, or blog posts by efficiently retrieving relevant information. It automates labor-intensive tasks such as research and fact-checking, thereby freeing journalists to concentrate on crafting compelling narratives and deeper analyses. For example, Amazon Bedrock Data Automation can process vast amounts of unstructured data, including video content, subtitles, and audio, to understand scene context, dialogue, and mood, automatically extracting and structuring this information for use.

- Streamlining Editorial Workflows: The integration of RAG into content creation workflows can significantly assist journalists in drafting articles and reports. Furthermore, it can aid in processing large volumes of documents, extracting critical data, and performing summarization and classification for backend optimization, enhancing overall operational fluidity.

- Accelerating Content Creation: AI-powered interview bots, leveraging RAG, have demonstrated the capability to produce excellent first drafts of blog posts, dramatically reducing the time per story and lowering production costs compared to human-written content. This efficiency extends to generating tailored social media prompts that adhere to a company’s brand standards, ensuring consistent messaging across platforms.

By automating research, fact-checking, summarization, and content drafting, RAG doesn’t replace journalists—it enhances them. It eliminates time-consuming tasks, allowing writers to focus on storytelling, investigation, and analysis. The result: higher-quality content, faster output, and better resource utilization across the newsroom.

4. Building Reader Trust and Upholding Journalistic Integrity

- Transparency Through Explicit Citations: A critical feature of RAG implementations is their ability to offer transparent source attribution, explicitly citing references for retrieved information. This crucial functionality allows users to cross-reference the content, thereby significantly enhancing credibility and fostering trust in the information provided.

- Addressing Ethical Considerations: Implementing RAG responsibly requires attention to data privacy, information authenticity, and broader societal impact. Azure AI Content Safety, for instance, can scan AI-generated content for protected material, which helps reduce the risk of copyright infringement. Bias mitigation during both model training and deployment is also necessary for maintaining objectivity in reporting.

- The Human-in-the-Loop: While RAG automates numerous processes, human judgment retains a pivotal role. A human review process for final summaries or any generated content before publication is essential to ensure accuracy, prevent the dissemination of misinformation, and navigate complex ethical dilemmas. In this symbiotic relationship, AI functions as a powerful assistant, not a replacement, ensuring that content consistently aligns with established journalistic standards, societal norms, and regulatory requirements.
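The human-in-the-loop requirement can be made explicit in code by refusing to publish anything an editor has not approved. The sketch below is a hypothetical workflow model; the class, status names, and sample values are assumptions rather than part of any AWS or Azure service.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    DRAFT = "draft"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class GeneratedDraft:
    text: str
    sources: list[str]
    status: Status = Status.DRAFT
    review_log: list[str] = field(default_factory=list)

    def review(self, editor: str, approve: bool, note: str = "") -> None:
        """Record an editor's decision and update the draft status."""
        self.status = Status.APPROVED if approve else Status.REJECTED
        self.review_log.append(f"{editor}: {self.status.value} {note}".strip())


def publish(draft: GeneratedDraft) -> str:
    """Release the text only if an editor approved it and it cites sources."""
    if draft.status is not Status.APPROVED:
        raise PermissionError("AI-generated drafts need editor approval before publication")
    if not draft.sources:
        raise ValueError("Draft has no cited sources")
    return draft.text


draft = GeneratedDraft(
    text="Council approves budget.",
    sources=["s3://example-bucket/archive/budget.txt"],
)
draft.review("editor@example.com", approve=True, note="checked against minutes")
print(publish(draft))
```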

Inaccurate or generic content erodes reader loyalty. When it is grounded in cited sources and reviewed by editors before publication, RAG-generated content can stay accurate and preserve a brand’s voice as newsrooms shift to digital workflows. RAG doesn’t replace journalists; it supports them in producing work that readers can verify and trust.

5. Achieving Competitive Advantage and Optimizing Costs

In an industry where margins are tightening and the pace of content consumption is accelerating, operational efficiency isn’t just a luxury—it’s a competitive necessity. RAG helps media organizations do more with less: reducing production costs, speeding up delivery, and even creating new monetization opportunities. By leveraging AI intelligently, newsrooms can maintain relevance, outperform larger competitors, and future-proof their business models.

AI reduces repetitive workload while preserving depth and nuance, which lets organizations scale automation without sacrificing quality. In retail settings, AI has been used to streamline operations and lower costs, and media organizations that adopt AI-powered architectures like RAG can realize the same benefits.

- Faster Time-to-Market: By streamlining the entire process of research, fact-checking, and content generation, RAG empowers news agencies to produce factually consistent content with enhanced efficiency. This directly translates into significantly quicker turnaround times for breaking news stories, enabling organizations to be first to report with accuracy.

- Reducing Computational and Financial Overhead: RAG is cost-effective compared with continuously retraining LLMs on new data, because new information is added to the knowledge base rather than to the model itself. When the pipeline is built on serverless managed services, the infrastructure scales with demand, which reduces infrastructure management costs and operational expenditure.

- Unlocking New Revenue Streams: For media companies, RAG introduces innovative opportunities for revenue generation. It can enable intelligent contextual ad placement by processing multimodal content such as video, subtitles, and audio to accurately understand scene context, dialogue, and mood. This allows the precise matching of advertisements with relevant content moments. Such intelligent matching leads to more relevant advertising, enhancing ad performance and potential revenue.

RAG gives news organizations a strategic edge—lowering costs, accelerating content delivery, and enabling smarter monetization. It levels the playing field for smaller players while empowering all media brands to innovate, grow audiences, and remain financially sustainable in an increasingly competitive landscape.

The following image sums up why the news and media industry benefits from custom RAG:

Building Custom RAG Solutions with AWS

Both AWS and Azure provide robust, scalable, and comprehensive service ecosystems that are well suited to building custom RAG solutions for the news and media industry. These platforms supply the components for each stage of the RAG pipeline, including data ingestion, indexing, retrieval, and integration with large language models. This section focuses on AWS, which offers a rich suite of services that can be orchestrated into highly customized RAG architectures, with flexibility and control over every component.

1. Data Ingestion and Preparation

The foundation of any effective RAG system is well-prepared data. AWS provides versatile storage and processing services for this critical initial phase.

- Amazon S3 (Simple Storage Service): News organizations can store a wide range of content formats, including documents, PDFs, proprietary reports, text files, images, audio, and video. For instance, historical news archives, real-time news feeds, multimedia assets, and internal style guides can all be securely housed in S3 buckets.

- Amazon Bedrock Data Automation: This service plays a crucial role in processing large volumes of unstructured and multimodal data. It automatically extracts and structures information from various content types, such as video content, subtitles, and audio, to understand scene context, dialogue, and mood. This automated extraction is vital for transforming raw media assets into a format suitable for RAG.

- Preprocessing and Chunking: Before indexing, documents are preprocessed. Large documents are divided into smaller chunks so that retrieval is precise and the retrieved text fits within the LLM’s context window, and each chunk is then converted into an embedding. Amazon Bedrock Knowledge Bases offers several chunking strategies, including fixed-size, hierarchical, and semantic chunking, which give fine-grained control over how content is prepared. For large datasets, the embedding step can be distributed, for example across a Ray cluster.
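To make fixed-size chunking concrete, here is a minimal word-based sketch with overlap between neighboring chunks. It is an approximation for illustration only: managed services typically measure chunk size in tokens rather than words, and the function name and defaults are assumptions.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into chunks of `chunk_size` words, repeating `overlap` words between neighbors."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be at least 0 and smaller than chunk_size")

    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current chunk has reached the end of the document.
        if start + chunk_size >= len(words):
            break
    return chunks


article = " ".join(f"w{i}" for i in range(10))
chunks = chunk_words(article, chunk_size=4, overlap=1)
print(chunks)
```

The overlap keeps a sentence that straddles a chunk boundary retrievable from either side, at the cost of storing some text twice.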

2. Indexing and Knowledge Base Creation

Once the data is prepared, it needs to be indexed to enable efficient retrieval. AWS provides specialized services for creating and managing these knowledge bases.

- Amazon Bedrock Knowledge Bases: This is a fully managed RAG capability within Amazon Bedrock that automates the entire RAG workflow, from ingestion to retrieval and prompt augmentation. It simplifies the creation of a knowledge base, which acts as the repository for embedding and retrieval.

- Amazon OpenSearch Service: A powerful alternative to standalone vector databases, OpenSearch Service provides dual search capabilities, combining traditional syntactic search (keyword-based) with advanced semantic search (via dense embeddings). It can ingest documents from various sources, and the embeddings and text chunks are indexed for hybrid search.
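The idea behind hybrid search, combining a keyword score with a semantic score, can be sketched without any search engine. The min-max normalization and weighted sum below are one common fusion approach, shown here as an assumption and not as a description of how OpenSearch Service computes its rankings. The scores and vectors are invented for the example.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def min_max(scores: list[float]) -> list[float]:
    """Rescale scores to the 0..1 range so the two score types are comparable."""
    if not scores:
        return []
    low, high = min(scores), max(scores)
    if high == low:
        return [0.0 for _ in scores]
    return [(s - low) / (high - low) for s in scores]


def hybrid_rank(
    keyword_scores: dict[str, float],
    semantic_scores: dict[str, float],
    semantic_weight: float = 0.5,
) -> list[tuple[str, float]]:
    """Blend normalized keyword and semantic scores, best document first."""
    ids = list(keyword_scores)
    kw = min_max([keyword_scores[i] for i in ids])
    sem = min_max([semantic_scores[i] for i in ids])
    combined = [
        (doc_id, (1 - semantic_weight) * k + semantic_weight * s)
        for doc_id, k, s in zip(ids, kw, sem)
    ]
    return sorted(combined, key=lambda pair: pair[1], reverse=True)


query_vector = [1.0, 0.0]
doc_vectors = {"a": [0.2, 1.0], "b": [1.0, 0.1], "c": [0.9, 0.4]}
semantic_scores = {doc_id: cosine(query_vector, vec) for doc_id, vec in doc_vectors.items()}
keyword_scores = {"a": 2.0, "b": 0.0, "c": 1.0}  # e.g. keyword match counts
ranking = hybrid_rank(keyword_scores, semantic_scores)
print(ranking)
```

In this toy data, document `c` ranks first because it scores reasonably on both signals, while `a` and `b` each do well on only one.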

3. Retrieval and LLM Integration

The core of RAG involves retrieving relevant information and augmenting the LLM’s prompt.

- Amazon Bedrock: This fully managed, serverless platform offers access to a wide choice of high-performing foundation models (FMs) from leading AI companies, including Amazon’s own Titan family of models.

- RetrieveAndGenerate API: Within Amazon Bedrock, the RetrieveAndGenerate API plays a central role in the RAG workflow. It first retrieves relevant data from the configured knowledge base and then feeds this context into the chosen foundation model to generate accurate, context-aware responses.
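A call to the RetrieveAndGenerate API through boto3 might look like the sketch below. The knowledge base ID and model ARN are placeholders you would replace with your own, the helper names are hypothetical, and the response handling assumes sources stored in S3. The client is passed in so the functions can be exercised without AWS credentials.

```python
def build_request(question: str, knowledge_base_id: str, model_arn: str) -> dict:
    """Assemble the keyword arguments for retrieve_and_generate."""
    return {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
            },
        },
    }


def ask_knowledge_base(client, question: str, knowledge_base_id: str, model_arn: str) -> dict:
    """Query a Bedrock knowledge base and return the answer with its source URIs."""
    response = client.retrieve_and_generate(
        **build_request(question, knowledge_base_id, model_arn)
    )
    sources = []
    for citation in response.get("citations", []):
        for reference in citation.get("retrievedReferences", []):
            # Assumes S3-backed sources; other location types are skipped here.
            uri = reference.get("location", {}).get("s3Location", {}).get("uri")
            if uri and uri not in sources:
                sources.append(uri)
    return {"answer": response["output"]["text"], "sources": sources}


# Example usage (requires boto3, AWS credentials, and an existing knowledge base):
#   import boto3
#   client = boto3.client("bedrock-agent-runtime")
#   result = ask_knowledge_base(
#       client,
#       "What did the council decide about the budget?",
#       knowledge_base_id="YOUR_KB_ID",    # placeholder
#       model_arn="YOUR_MODEL_ARN",        # placeholder
#   )
#   print(result["answer"], result["sources"])
```

Returning the source URIs alongside the answer gives editors the audit trail needed to verify each generated claim.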

Architecture Examples and Use Cases for the News and Media Industry

AWS supports various architectural patterns for custom RAG, tailored to different news media needs:

- News Summarization: A news summarizer tool can leverage Amazon Bedrock’s language models to fetch and condense news articles from multiple RSS feeds and APIs (e.g., GNews, NewsAPI), generating concise summaries of the latest news.

- Media Analytics: VideoAmp, a media measurement company, utilized Amazon Bedrock to develop a Natural Language Analytics Chatbot. This AI assistant comprehends natural language queries, generates and executes SQL queries on their data warehouse, and delivers natural language summaries of retrieved media analytics data, enabling real-time insights for advertisers and media owners.

- Intelligent Search: Amazon Kendra can be used to create secure, generative AI-powered conversational experiences over enterprise content, providing intelligent search capabilities and an optimized Retriever API for RAG workflows. This can include searching through internal style guides to ensure brand-appropriate responses.

- Fact-Checking: While not a direct case study, the architecture for fake news detection using LLMs in Amazon Bedrock demonstrates the potential for RAG to enhance fact-checking processes by grounding statements in verifiable information.
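For the news summarization pattern above, the feed-handling step could be sketched as follows. The sample feed, URLs, and prompt wording are invented for illustration, and the resulting prompt would be sent to whichever foundation model the newsroom has chosen. Only the Python standard library is used.

```python
import xml.etree.ElementTree as ET

SAMPLE_RSS = """<?xml version="1.0"?>
<rss version="2.0"><channel>
  <title>Example Feed</title>
  <item>
    <title>Council approves budget</title>
    <link>https://example.com/budget</link>
    <description>The city council approved the annual budget.</description>
  </item>
  <item>
    <title>Transit plan delayed</title>
    <link>https://example.com/transit</link>
    <description>A vote on the transit plan moved to next month.</description>
  </item>
</channel></rss>"""


def parse_rss_items(rss_xml: str, limit: int = 5) -> list[dict]:
    """Extract title, link, and description from the first `limit` RSS items."""
    root = ET.fromstring(rss_xml)
    items = []
    for item in root.iter("item"):
        if len(items) >= limit:
            break
        items.append({
            "title": (item.findtext("title") or "").strip(),
            "link": (item.findtext("link") or "").strip(),
            "description": (item.findtext("description") or "").strip(),
        })
    return items


def build_summary_prompt(items: list[dict], max_sentences: int = 3) -> str:
    """Build a prompt asking the model to summarize the items and keep their links."""
    lines = [
        f"{i}. {item['title']} ({item['link']}): {item['description']}"
        for i, item in enumerate(items, start=1)
    ]
    return (
        f"Summarize the following news items in at most {max_sentences} sentences. "
        "Mention the link for each item you use.\n\n" + "\n".join(lines)
    )


items = parse_rss_items(SAMPLE_RSS)
summary_prompt = build_summary_prompt(items)
print(summary_prompt)
```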

Conclusion: RAG Adoption in News & Media

Custom RAG is redefining how news organizations meet the demands of speed, accuracy, and trust. By enabling LLMs to retrieve real-time, verifiable information, RAG addresses core challenges like hallucinations and outdated knowledge, making AI-generated content far more reliable. This shift is critical in an era where misinformation spreads quickly and public trust is fragile.

Beyond accuracy, RAG enhances newsroom efficiency by automating research, summarization, and initial content drafting. It empowers journalists to focus on deeper analysis and storytelling while supporting a continuous production model essential for today’s 24/7 news cycle.

Platforms like AWS and Azure provide the tools to scale custom RAG solutions—from data ingestion to LLM integration—offering flexibility, security, and cost-efficiency. With services like Amazon Bedrock and Azure OpenAI, media companies can create tailored, responsible AI systems that support editorial goals.

In short, custom RAG isn’t just an AI upgrade—it’s the future of credible, efficient, and resilient journalism. Learn more about our Generative AI Services if you’re looking for an RAG implementation partner.