Modern retail and ecommerce businesses operate in a complex environment defined by demand volatility, intricate supply chains, and the need to balance customer satisfaction with operational efficiency. Traditional forecasting methods, which often rely on manual statistical models, struggle to keep pace with these challenges, resulting in critical issues such as stockouts, over-provisioning, and inefficient resource allocation. A cloud-native forecasting architecture represents a fundamental shift from a reactive to a proactive operational model.

The adoption of a cloud-native approach directly addresses these pain points by connecting technical decisions to tangible business outcomes. For example, a retailer leveraging an Amazon Forecast-based solution for fresh produce was able to increase its forecasting accuracy from 27% to 76%, which in turn reduced wastage by 20% and improved gross margins. Beyond inventory, forecasting can optimize human resources; AffordableTours.com used a solution to anticipate call volumes, improving their missed call rate by approximately 20% and ensuring they had the right number of agents each day.

Architectural Paradigms: Choosing Your Path on AWS

The decision to build a cloud-native forecasting architecture involves a critical choice of strategic effort and control. The AWS ecosystem provides a spectrum of services, ranging from fully managed, low-effort solutions to high-control, intense-effort platforms. The ideal path depends on an organization’s internal machine learning (ML) expertise, budget, and strategic objectives.

A Low-Effort Managed Approach: Amazon Forecast

This architectural paradigm is designed for organizations that require rapid time-to-value with minimal internal ML expertise and a focus on core business outcomes. The solution is centered on Amazon Forecast, a fully managed service that leverages the same technology used by Amazon.com’s own retail operations.

Amazon Forecast automates the entire ML lifecycle. A user simply provides historical time-series data and any related variables that may influence the forecast—such as promotions or weather patterns—and the service handles the rest, including data preparation, algorithm selection, and model training. This automation removes the significant operational burden of managing complex infrastructure and algorithms, enabling business analysts and supply chain managers to self-serve without needing to wait for a specialized technical team. The speed of this approach is a major business advantage; for example, the Adore Beauty team was able to build a sales revenue forecasting prototype in just four days, which they subsequently extended to every brand they supported.

The service is not a simple, naive forecasting tool. It is capable of handling multivariate time series. It utilizes advanced deep learning algorithms, such as DeepAR+, which have been shown to outperform traditional statistical methods like exponential smoothing, especially for highly volatile items. A further capability is the generation of probabilistic forecasts at various quantiles (e.g., P10, P50, P90), which allows business leaders to make informed decisions by balancing the risks and costs of under-forecasting (stockouts) against over-forecasting (excess inventory and waste).

A More Fine-Grained High Control Approach: Amazon SageMaker

This architectural paradigm is suited for enterprises with dedicated ML engineering and data science teams that require maximum customization, complete control over their models, and the ability to innovate at the cutting edge of ML. This approach centers on Amazon SageMaker, a comprehensive, fully managed service that supports the entire ML lifecycle from end to end.

The cornerstone of this approach is the creation of end-to-end MLOps pipelines. Services like SageMaker Pipelines provide a framework to orchestrate multi-step ML workflows, ensuring they are reproducible, testable, and automated. A typical pipeline, defined using a directed acyclic graph (DAG), can include steps for data preprocessing, feature engineering, model training, hyperparameter tuning, model evaluation, and deployment to a production endpoint. The SageMaker Model Registry serves as a critical component, managing production-ready models by organizing versions, capturing metadata, and governing approval status.

This path provides the flexibility to implement any custom model, including advanced foundation models like Chronos, an LLM-based model for time series forecasting. Chronos is pre-trained on large datasets and can generalize forecasting capabilities across multiple domains, excelling at zero-shot forecasts without specific training on a new dataset. The MLOps framework is the true long-term value creator in this intense approach. The core value lies not in a single, well-trained model, but in a robust, automated, and repeatable framework that enables a large enterprise to continuously iterate, improve, and deploy new predictive capabilities.

A Hybrid Model: Blending Managed Services with Custom Workflows

A highly practical and common strategy for large enterprises is to implement a hybrid forecasting model. This involves utilizing fully managed services, such as Amazon Forecast, for recurring, lower-effort forecasting tasks, particularly for low-impact SKUs, and concurrently, leveraging Amazon SageMaker for high-value or high-impact SKUs, where greater control and customization are required.

This hybrid approach acknowledges that even with managed services, the most significant complexity often lies in the data engineering and orchestration layers. The highlights are that the fundamental data engineering and orchestration that feeds the model are the most critical parts of the entire forecasting system. A well-designed hybrid architecture enables an organization to leverage the speed and ease of managed services while maintaining necessary control over critical data workflows.

Total Cost of Ownership: The Financial Equation

A comprehensive understanding of TCO is crucial for making a strategic decision about a cloud-native forecasting solution. TCO encompasses not only the direct costs of AWS services but also the indirect costs associated with operations, staffing, and long-term maintenance.

The TCO of a cloud-native forecasting architecture includes several direct and indirect cost components:

- Compute: This is the primary cost driver and includes fees for model training and real-time inference. Training jobs, especially those using powerful GPU instances, can be expensive. The choice of instance type and the duration of training are key variables in this cost equation.

- Storage: The cost of storing raw data, processed data, and model artifacts in services like Amazon S3 and other data lake components.

- Networking: An often-overlooked cost driver, data egress fees can dominate an AWS bill and skew forecasts. Best practices include avoiding unnecessary cross-region traffic and using AWS VPC endpoints to keep traffic within the AWS network.

- Human Capital: A significant indirect cost. This includes the salaries of data scientists, ML engineers, and DevOps teams.

A strategic assessment of TCO must also consider the return on investment for human capital. A report analyzing the TCO of Amazon SageMaker concluded that its TCO over a three-year horizon is 54% lower compared to self-managed options like Amazon EC2 or Amazon EKS. This is primarily due to the automation of operational tasks, which can improve data scientists’ productivity by up to 10 times. The most valuable asset for a CTO is their talent. By using managed services that automate boilerplate tasks, a CTO can free up their most skilled people to focus on high-value, creative problem-solving. TCO, in this context, is not just about financial savings but about the long-term return on human productivity.

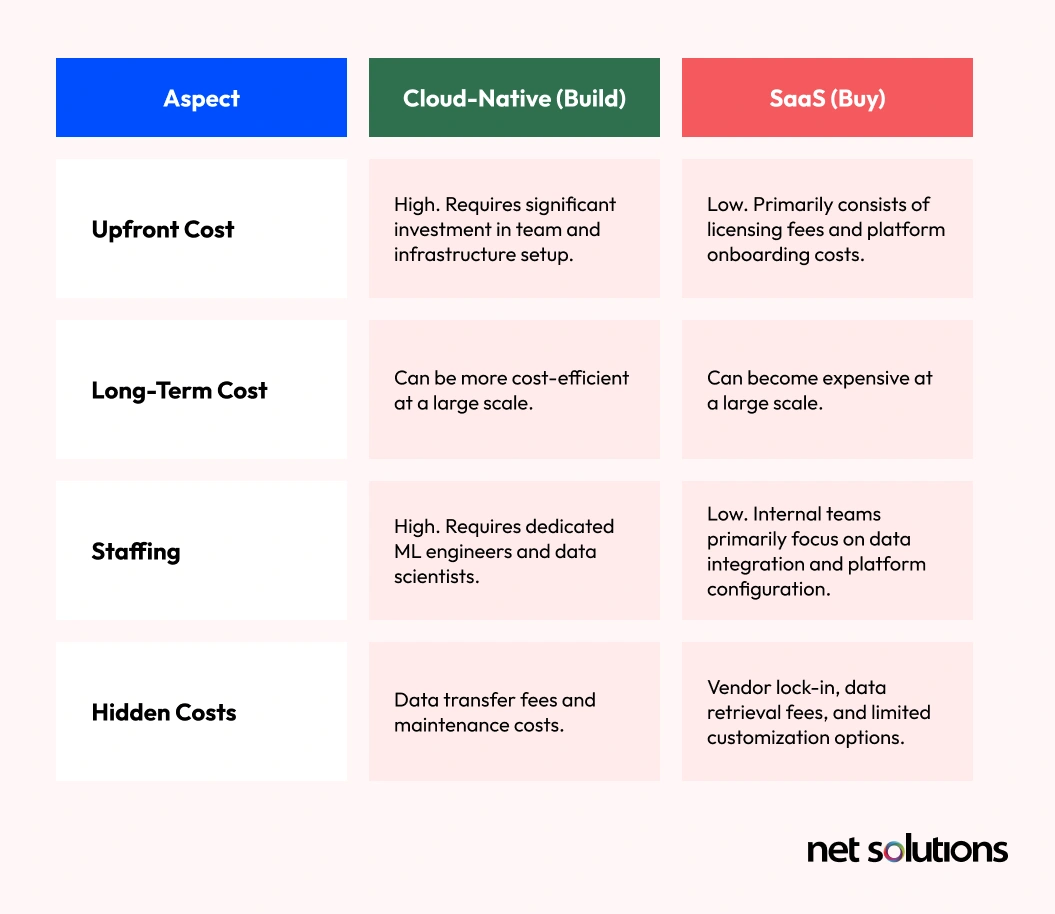

The Build vs. Buy Equation: Cloud-Native vs. SaaS

The traditional build-versus-buy debate has evolved. A cloud-native solution on AWS (build) offers a high-control, scalable platform. A third-party SaaS forecasting solution (buy) provides a low-effort, predictable operational expense. The following table provides a detailed TCO comparison for a CTO.

The analysis suggests that the modern build-versus-buy debate is not a simple calculation of a license fee versus AWS compute costs. It is a strategic choice between investing in a “fragmented AI stack” versus a “unified AI platform”.31 A custom “DIY stack” can have development costs ranging from $600,000 to over $1,500,000 for a single agent, not including recurring maintenance. For large enterprises with a long-term vision for ML, building a foundational, repeatable MLOps capability on a managed platform like SageMaker is often the more profitable long-term decision, as it provides strategic control, eliminates vendor lock-in, and frees up valuable internal talent.

Data Sovereignty and Governance: Securing the Digital Supply Chain

In the retail and eCommerce sectors of the UK and North America, the role of a legal and regulatory steward is very important. Data sovereignty and governance are not optional add-ons; they are non-negotiable requirements that must be baked into the architecture from the very beginning.

Two key concepts define the strategic landscape:

- Data Residency: The physical location where data is stored. This means having the ability to select and pin data to specific AWS Regions, such as London or the US East (N. Virginia) Region.

- Digital Sovereignty: A more comprehensive concept that ensures data and the infrastructure that processes it are subject to the legal and governance structures of the nation where they are collected. This includes ensuring operational autonomy and resilience, which is critical for compliance with regulations such as GDPR and the UK’s own data protection laws.

AWS provides a robust suite of services that allow a custom-built Forecasting Solution built on a comprehensive governance framework. The AWS suite of tools transforms governance from a manual process into an automated, technical framework. AWS Control Tower, for instance, provides rule-based policies, known as “Guardrails,” that can automatically block deployments that do not conform to data security rules. Similarly, AWS KMS automates the process of key management and encryption.

Anticipating and Mitigating Common Pitfalls

Even a well-designed architecture can fail if common pitfalls are not addressed proactively. The research identifies several key challenges that are often overlooked in the planning phase.

Data Quality and Integrity

The most significant risk to any forecasting system is poor data quality. The research highlights issues such as missing values, inconsistent data formats, outdated information, and duplicate records, which can disrupt trend analysis and lead to inaccurate predictions. The solution is to treat data quality as a continuous, operational challenge that can be solved with the right architecture and automation. Services like AWS Glue DataBrew provide a visual data preparation tool that allows data analysts to clean and normalize data without writing a single line of code, ensuring that the data is in the correct format for the forecasting service.

Organizational Silos and Misaligned Expectations

A common pitfall is the failure to recognize that effective forecasting is a cross-functional effort. The research warns that “effective cloud forecasting isn’t just a finance problem” and requires input from engineering, product, and operations teams. The solution is to adopt FinOps practices to give all teams shared visibility and context, allowing them to plan together.

The MLOps Challenge

For a custom architecture, the complexity of productionizing and maintaining ML models is a significant pitfall. The antidote is a robust, automated MLOps pipeline. This pipeline must include steps for continuous monitoring to detect model drift and an automated retraining mechanism to ensure the model remains accurate over time.

Conclusion: From Blueprint to Reality

The design of a cloud-native forecasting architecture on AWS is a strategic initiative for any business leader in the retail and ecommerce sectors. A comprehensive blueprint is required, from the high-level business case to the specific services and best practices. The optimal choice depends on an organization’s internal expertise, budget, and desired time-to-market. By embracing a well-architected, proactive approach, retail & e-commerce businesses not only predict future demand but also help their organization shape it, transforming their technical department into a core driver of business value.