In eCommerce, search is no longer just a utility; it’s a critical differentiator, a conversion engine, and a direct reflection of a brand’s commitment to customer satisfaction. Traditional keyword-based search often falls short, struggling with nuance, context, and the inherent variability of human language. Enter Generative AI, poised to revolutionize how customers discover products, making search more intuitive, intelligent, and, ultimately, more effective.

This blog post unveils a plug-and-play AWS blueprint for building a Gen AI-powered eCommerce search solution that dramatically enhances user experience (UX). We’ll dive deep into the essential building blocks, covering both the sophisticated backend architecture that powers intelligent discovery and the intuitive frontend interfaces that redefine user interaction.

The Unseen Powerhouse: Backend Architecture for Intelligent Search

The magic of a Gen AI-powered search lies in its ability to understand user intent beyond mere keywords. This requires a robust backend that can process, store, and intelligently retrieve product information. Our blueprint focuses on three core components: RAG-enabled embedding, a high-performance vector database, and a sophisticated ranking search module. The search bar is the digital front door; building on AWS unlocks long‑term control and innovation.

The Foundation: RAG-Enabled Product Catalog Embedding

At the heart of our intelligent search solution is the ability to transform your entire eCommerce catalog – including images, product titles, and detailed descriptions – into a format that AI can understand and reason with. This is where Retrieval-Augmented Generation (RAG) principles come into play. Instead of relying solely on a large language model (LLM) for generation, we “augment” its understanding by providing it with highly relevant, embedded context from your product data. Grounding the model’s output in retrieved, authoritative catalog information also reduces hallucinations.

For each product in your catalog, we generate numerical representations, or “embeddings,” that capture its semantic meaning. This process involves:

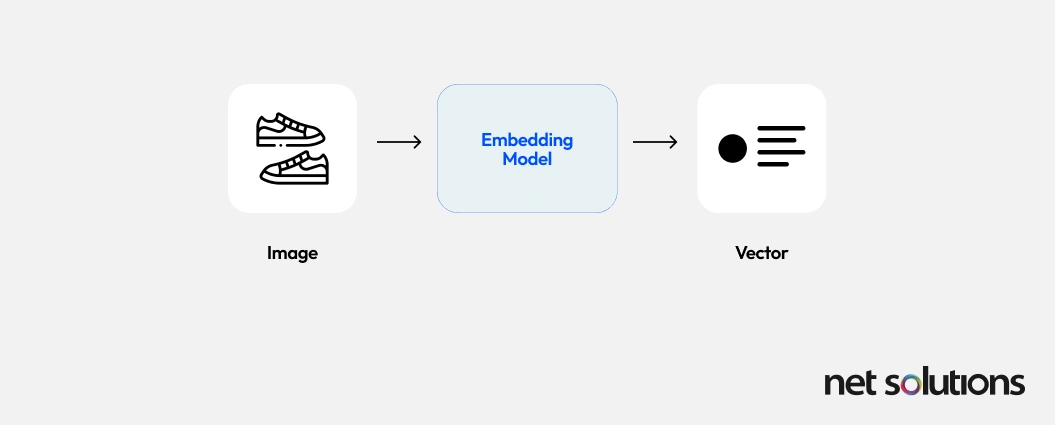

1. Image Embeddings

High-quality images are crucial in eCommerce. Using advanced computer vision models (e.g., those available via Amazon Rekognition or integrated with Amazon SageMaker), we extract features from product images and convert them into vector embeddings. These embeddings allow the system to understand visual similarities and respond to image-based queries. Imagine a user uploading a photo of a dress they like, and the system instantly finding similar styles in your catalog.

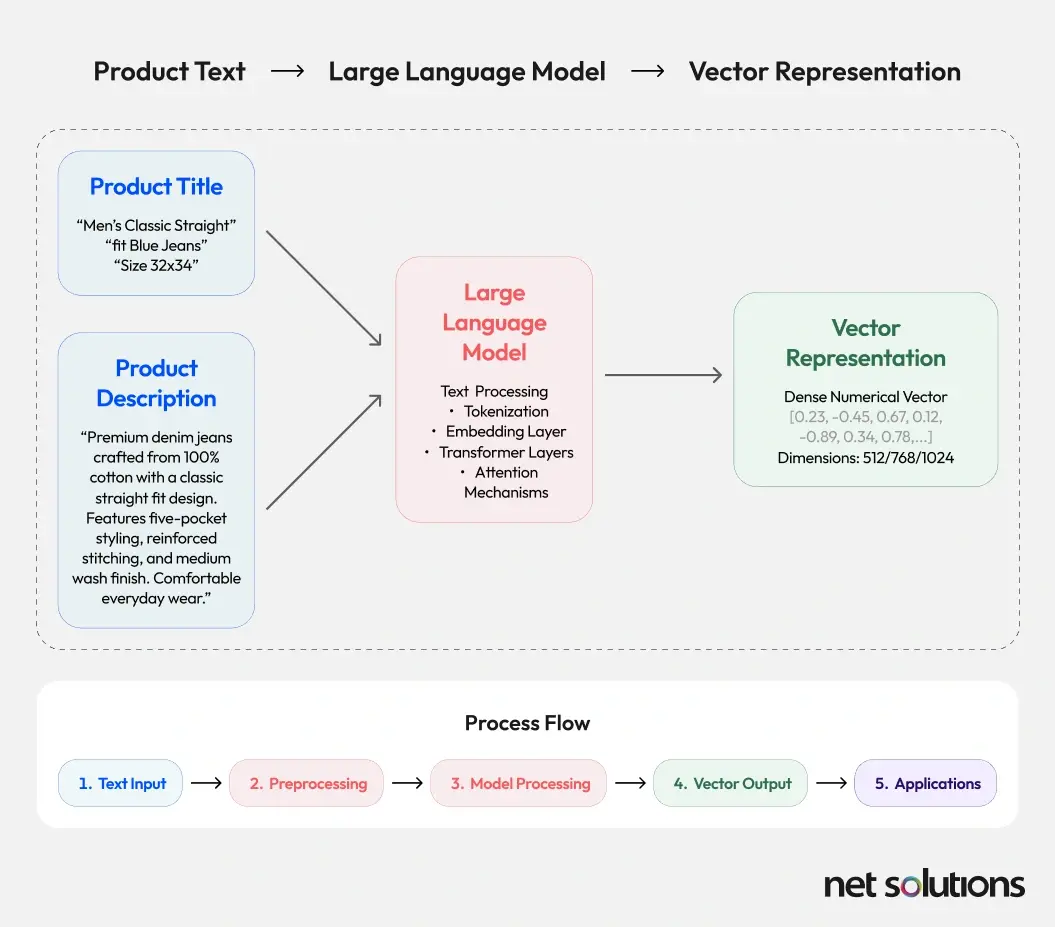

2. Product Title and Description Embeddings

The product title and description are goldmines of textual information. We leverage powerful natural language processing (NLP) models (e.g., sentence transformers or models fine-tuned with Amazon Comprehend) to convert these textual attributes into dense vector embeddings. This process captures not just keywords, but the contextual meaning, synonyms, and relationships between words, enabling the system to understand complex queries like “comfortable running shoes for wide feet” even if those exact phrases aren’t in the product description.

By combining embeddings from images and text, we create a rich, multi-modal representation for every product. This comprehensive embedding allows for more accurate and contextually relevant search results.
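
One simple way to picture the combination step is to normalize each embedding and concatenate them with a weight. The sketch below is illustrative only: the function names, the `image_weight` parameter, and the tiny example vectors are assumptions, and a production system might instead use a single multi-modal embedding model.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length; return a copy unchanged if it is all zeros."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def combine_embeddings(image_vec, text_vec, image_weight=0.5):
    """Concatenate weighted, unit-length image and text vectors into one vector."""
    text_weight = 1.0 - image_weight
    img = [image_weight * x for x in l2_normalize(image_vec)]
    txt = [text_weight * x for x in l2_normalize(text_vec)]
    # Normalize again so the combined vector is also unit length.
    return l2_normalize(img + txt)


# Toy vectors; real embeddings have hundreds or thousands of dimensions.
product_vector = combine_embeddings([3.0, 4.0], [1.0, 0.0, 0.0])
```

Normalizing before weighting keeps one modality from dominating simply because its raw vectors happen to be larger.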

The Memory Bank: Vector Database

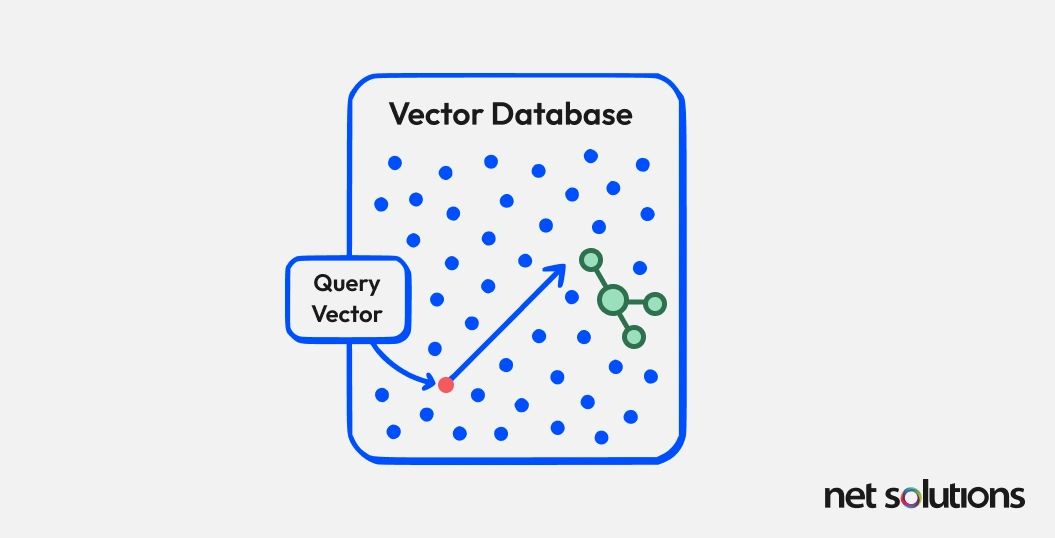

Once your entire product catalog is transformed into high-dimensional vector embeddings, you need an efficient way to store and retrieve them. This is the role of a vector database. Unlike traditional relational databases, which are optimized for storing and querying structured data, vector databases are purpose-built for storing, indexing, and querying vector embeddings based on their similarity.

AWS offers excellent options for this, such as Amazon OpenSearch Service with its k-NN (k-Nearest Neighbor) plugin or Amazon Aurora PostgreSQL with the pgvector extension. These databases allow for lightning-fast nearest neighbor searches, meaning when a user issues a query (which is also converted into an embedding), the system can quickly find the most similar product embeddings in your catalog. The closer the vectors are in the multi-dimensional space, the more relevant the product is to the query.
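
To make “closeness” concrete, here is a minimal brute-force sketch of a nearest neighbor lookup using cosine similarity. It is a conceptual illustration, not how a vector database works internally: OpenSearch k-NN and pgvector rely on approximate indexes to avoid scanning every vector. The catalog contents and function names are made up for the example.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec, catalog, k=3):
    """Score every product against the query and return the k most similar."""
    scored = [(pid, cosine_similarity(query_vec, vec)) for pid, vec in catalog.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical three-dimensional product embeddings.
catalog = {
    "trail-shoe": [0.9, 0.1, 0.0],
    "road-shoe": [0.7, 0.3, 0.1],
    "dress-shirt": [0.0, 0.2, 0.9],
}
results = top_k([1.0, 0.0, 0.0], catalog, k=2)
```

Here the two shoe products rank ahead of the shirt because their vectors point in nearly the same direction as the query vector.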

The Brain: Search and Ranking Module

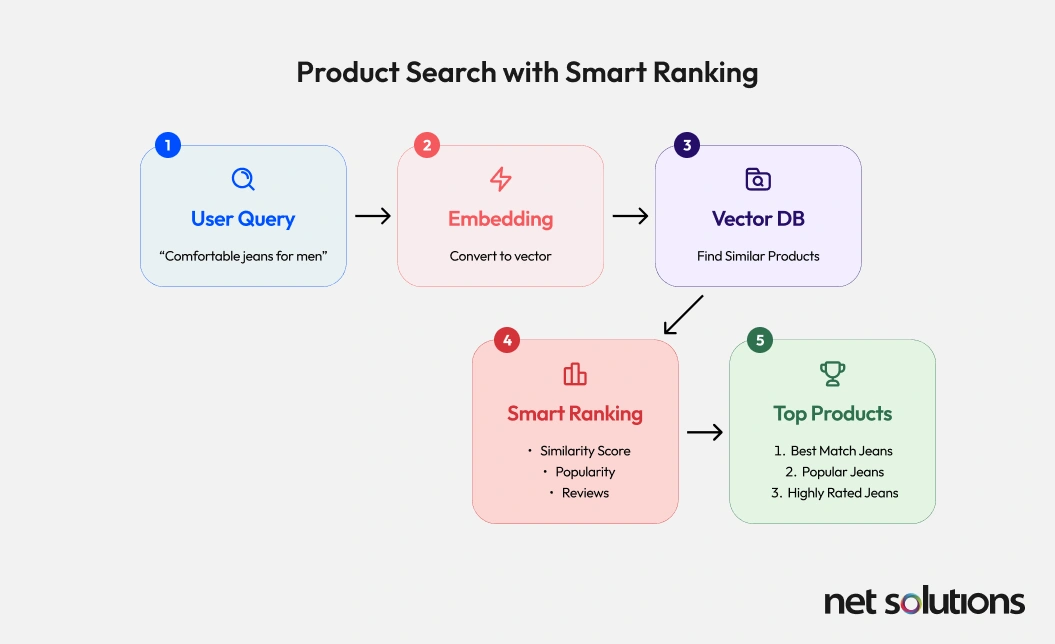

The search module acts as the “brain” of our backend, orchestrating the retrieval and refinement of results. It takes the user’s query, converts it into an embedding, queries the vector database for similar products, and then applies a sophisticated ranking algorithm to present the most relevant items.

- User Query Processing: When a user enters a query (text, audio, or image), it’s first processed. Text queries are embedded using the same NLP models as the product descriptions. Audio queries are transcribed into text using Amazon Transcribe and then embedded. Image queries are processed through the image embedding model.

- Vector Similarity Search: The user query embedding is then used to perform a similarity search in the vector database. This retrieves an initial set of hundreds or thousands of potentially relevant product embeddings.

- The Ranking Solution: This is where the real intelligence shines. Simply retrieving the “closest” vectors isn’t enough; we need to rank them to show the most commercially relevant items. This ranking solution is typically a machine learning model trained on various signals, including:

  - Semantic Similarity Score: The core similarity returned by the vector database.

  - Product Popularity/Sales Data: Products that sell well or are frequently viewed should get a boost.

  - Customer Reviews/Ratings: Highly rated products are often preferred.

  - Inventory Levels: Prioritizing in-stock items.

  - Personalization Signals: User browsing history, past purchases, and expressed preferences.

  - Business Rules: Specific promotions, new arrivals, or strategic product pushes.

By combining these signals, the ranking module ensures that the top 5 to 10 products presented to the user are not just semantically relevant but also optimized for conversion and business objectives. The multi-faceted approach transforms a simple search into a robust product discovery engine. Tools like Amazon Connect, Lex, and Kendra play a vital role in orchestrating these intelligent interactions and powering advanced customer support.
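
As a rough illustration of how such signals can be blended, the sketch below uses a hand-weighted linear score as a stand-in for a trained ranking model. The weights, field names, and the out-of-stock penalty are all hypothetical, and personalization is left out for brevity.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    product_id: str
    similarity: float       # semantic similarity from the vector search, 0..1
    popularity: float       # normalized sales/view signal, 0..1
    rating: float           # average review rating, 0..5
    in_stock: bool
    promoted: bool = False  # business-rule flag


# Hypothetical weights; a trained model would learn these from behavioral data.
WEIGHTS = {"similarity": 0.6, "popularity": 0.2, "rating": 0.15, "promoted": 0.05}


def score(c):
    """Blend the signals into one number; out-of-stock items are demoted."""
    base = (
        WEIGHTS["similarity"] * c.similarity
        + WEIGHTS["popularity"] * c.popularity
        + WEIGHTS["rating"] * (c.rating / 5.0)
        + WEIGHTS["promoted"] * (1.0 if c.promoted else 0.0)
    )
    return base if c.in_stock else base * 0.5


def rank(candidates, top_n=10):
    """Return the top_n candidates, highest score first."""
    return sorted(candidates, key=score, reverse=True)[:top_n]


candidates = [
    Candidate("a", similarity=0.95, popularity=0.2, rating=4.0, in_stock=False),
    Candidate("b", similarity=0.85, popularity=0.9, rating=4.5, in_stock=True),
    Candidate("c", similarity=0.80, popularity=0.5, rating=3.0, in_stock=True, promoted=True),
]
ranked = rank(candidates, top_n=2)
```

In this toy data, product "a" is the closest semantic match but drops out of the top two because it is out of stock, which is the kind of trade-off the ranking layer exists to make.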

The Engaging Gateway: Frontend User Experience

While the backend does the heavy lifting, the frontend is where the user directly experiences the power of Gen AI. Our blueprint focuses on creating a seamless, natural, and highly interactive search interface that goes beyond traditional search bars.

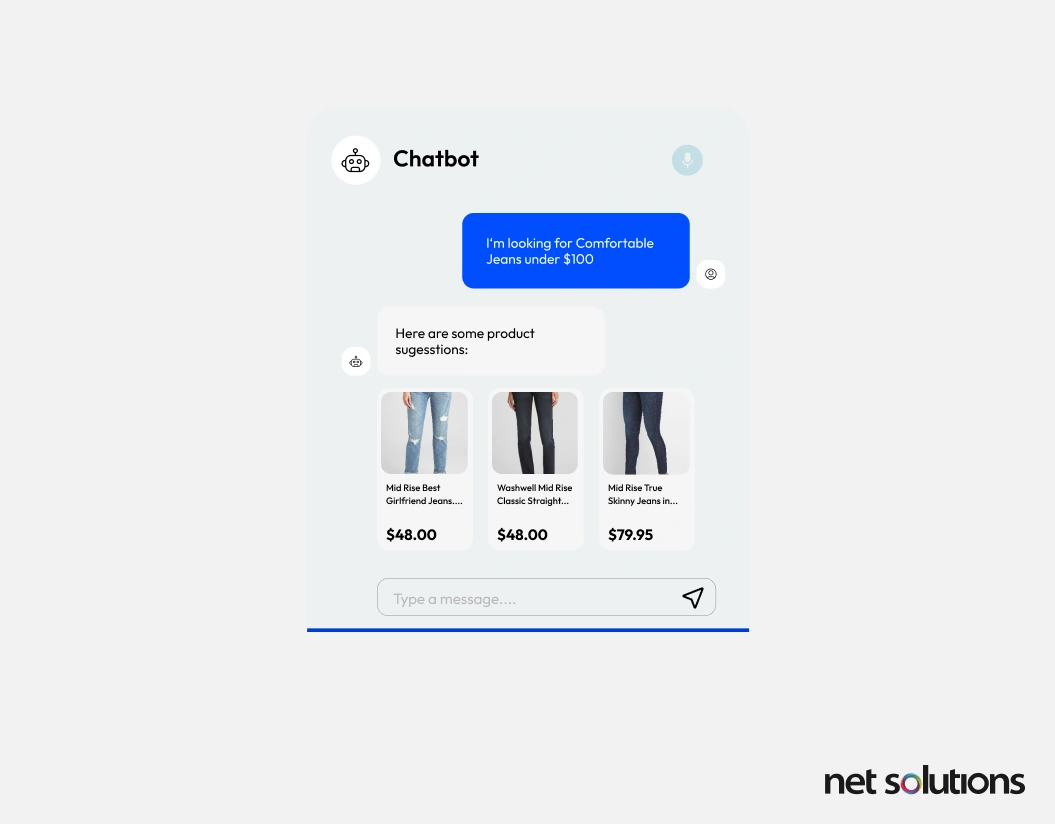

The Conversational Hub: Search Interface as a Chatbot

Moving away from the sterile search box, our solution proposes a search interface built as a conversational chatbot. This enables users to express their needs in natural language, just as they would when speaking with a sales assistant in a physical store.

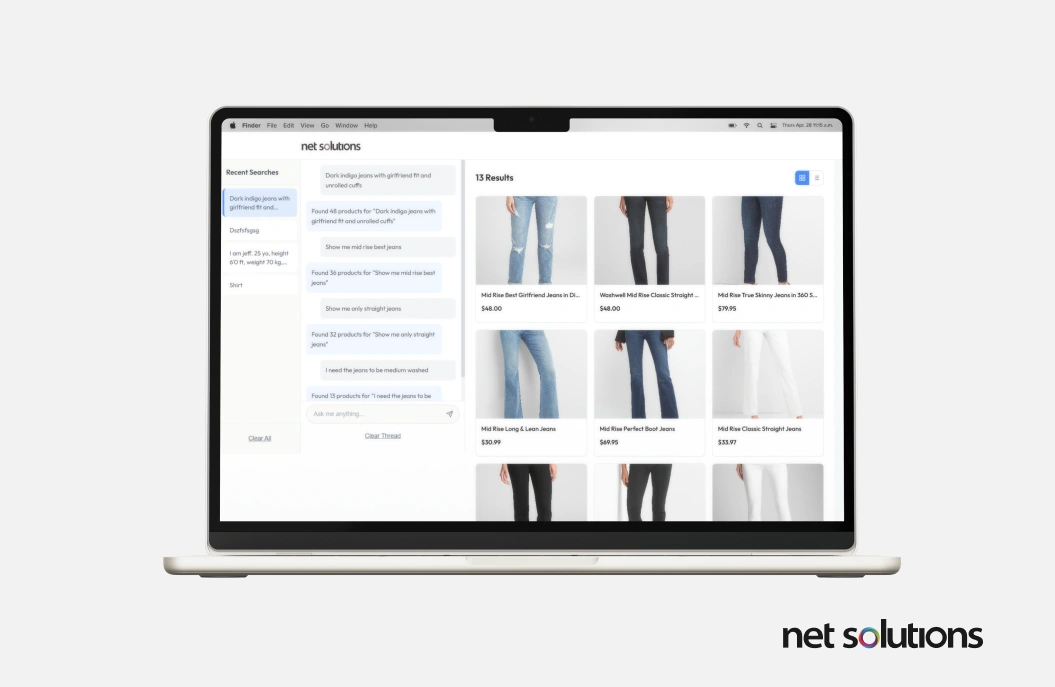

- Natural Language Interaction: Users can type questions like, “Show me a formal dress for a summer wedding,” or “I need comfortable sneakers for hiking.” The chatbot, powered by an LLM (e.g., Amazon Bedrock with models like Claude or Llama 2), understands the nuances, extracts entities (dress, summer, wedding, comfortable, sneakers, hiking), and constructs a precise query for the backend.

- Contextual Understanding: The chatbot can maintain conversational context, allowing follow-up questions like, “What about that in blue?” or “Are there any cheaper options?” This iterative refinement makes the search process highly intuitive and less frustrating.
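
One way to think about conversational context is as a set of search constraints that accumulates across turns. The sketch below assumes the LLM has already extracted constraints from each message as a dictionary; the `SearchSession` class and its keys are hypothetical names used only for illustration.

```python
class SearchSession:
    """Keeps the constraints gathered so far in a conversation."""

    def __init__(self):
        self.constraints = {}

    def update(self, extracted):
        """Merge constraints from the latest turn; newer values override older ones."""
        for key, value in extracted.items():
            if value is None:
                self.constraints.pop(key, None)  # None clears a constraint
            else:
                self.constraints[key] = value
        return dict(self.constraints)


session = SearchSession()
# Turn 1: "Show me a formal dress for a summer wedding"
session.update({"category": "dress", "occasion": "summer wedding", "style": "formal"})
# Turn 2: "What about that in blue?"
query = session.update({"color": "blue"})
```

After the second turn, `query` still carries the dress, occasion, and style constraints from the first message, so the follow-up does not need to repeat them.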

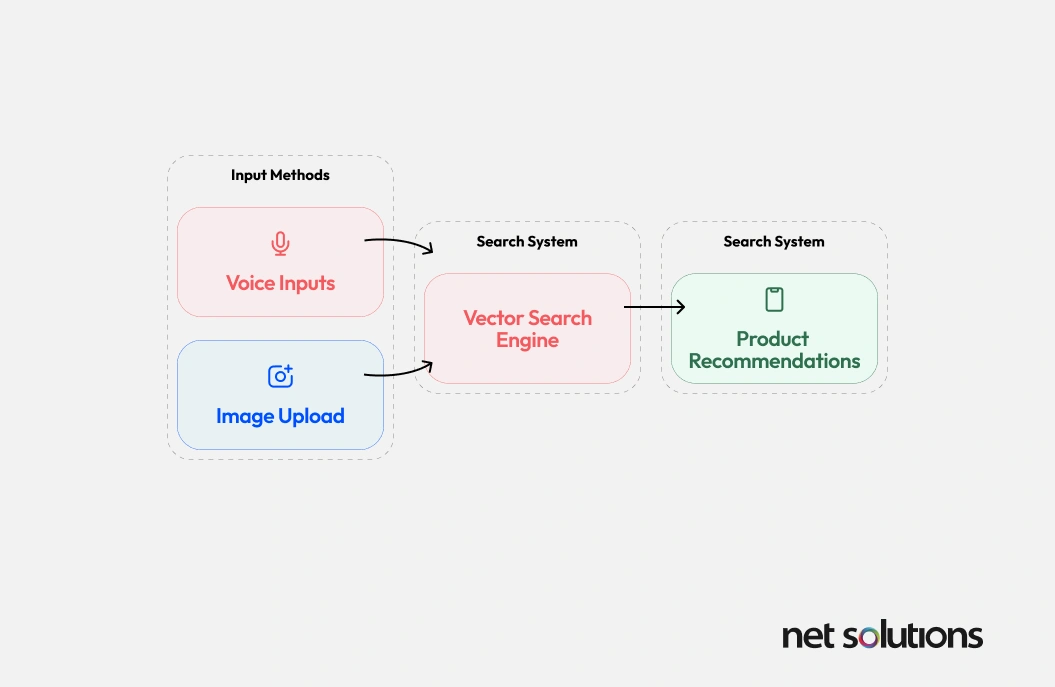

Beyond Text: Audio and Image Search

To truly accelerate UX, we incorporate multi-modal input capabilities, catering to diverse user preferences and scenarios.

- Audio Search: Leveraging Amazon Transcribe, users can simply speak their search queries. This is particularly useful for mobile users or those with accessibility needs. The transcribed text is then fed into the backend’s embedding and ranking pipeline.

- Image Search: This is a game-changer. Users can upload an image (e.g., a photo of a specific watch, a piece of furniture, or an outfit), and the system will use the image embedding capabilities of the backend to find visually similar products in the catalog. This “visual search” capability eliminates the need for users to struggle with descriptive keywords, allowing them to simply show what they are looking for.
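
The routing of text, audio, and image input into a single embedding step might be sketched as follows. The embedding and transcription functions are passed in as parameters because the actual service calls are outside the scope of this example; the stand-in functions below are placeholders, not real AWS APIs.

```python
def embed_query(query, kind, *, embed_text, embed_image, transcribe):
    """Turn a text, audio, or image query into an embedding vector."""
    if kind == "text":
        return embed_text(query)
    if kind == "audio":
        # Audio is transcribed first, then embedded like any other text query.
        return embed_text(transcribe(query))
    if kind == "image":
        return embed_image(query)
    raise ValueError(f"unsupported query type: {kind}")


# Stand-in callables for illustration only; real ones would call hosted models.
def fake_embed_text(text):
    return [float(len(text))]


def fake_embed_image(image_bytes):
    return [float(len(image_bytes)), 0.0]


def fake_transcribe(audio_bytes):
    return "running shoes"


vector = embed_query(
    b"\x00\x01",
    "audio",
    embed_text=fake_embed_text,
    embed_image=fake_embed_image,
    transcribe=fake_transcribe,
)
```

Keeping the modality handling in one function means the vector search and ranking stages never need to know how the query was originally expressed.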

Refined Discovery: Product Filtering

While the Gen AI-powered search aims for high accuracy, users often want to refine their results. The search interface includes robust filtering options that work seamlessly with the AI-driven results. After the initial AI-powered ranking, users can apply traditional filters based on:

- Category: (e.g., “Electronics,” “Apparel,” “Home Goods”)

- Price Range: (e.g., “$50 – $100”)

- Brand: (e.g., “Nike,” “Samsung”)

- Specific Attributes: (e.g., “Size: M,” “Color: Red,” “Material: Cotton”)

These filters interact with the AI-generated results, enabling users to drill down to their exact preferences without sacrificing the benefits of the initial intelligent ranking. This hybrid approach offers the best of both worlds: AI-driven discovery combined with user-controlled refinement.
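
A minimal sketch of this hybrid behavior, assuming ranked results arrive as a list of dictionaries, is shown below. The field names and sample products are hypothetical; the point is that filtering removes items without reordering the ones that remain.

```python
def apply_filters(ranked_products, category=None, brand=None, price_range=None, attributes=None):
    """Return the products that match every supplied filter, keeping ranked order."""
    attributes = attributes or {}
    kept = []
    for product in ranked_products:
        if category is not None and product["category"] != category:
            continue
        if brand is not None and product["brand"] != brand:
            continue
        if price_range is not None:
            low, high = price_range
            if not (low <= product["price"] <= high):
                continue
        product_attrs = product.get("attributes", {})
        if any(product_attrs.get(k) != v for k, v in attributes.items()):
            continue
        kept.append(product)
    return kept


# Already ordered by the ranking module, best match first.
ranked_products = [
    {"id": "p1", "category": "Apparel", "brand": "Nike", "price": 120.0, "attributes": {"Color": "Red"}},
    {"id": "p2", "category": "Apparel", "brand": "Nike", "price": 80.0, "attributes": {"Color": "Red"}},
    {"id": "p3", "category": "Apparel", "brand": "Nike", "price": 60.0, "attributes": {"Color": "Blue"}},
    {"id": "p4", "category": "Apparel", "brand": "Nike", "price": 95.0, "attributes": {"Color": "Red"}},
]
filtered = apply_filters(
    ranked_products, brand="Nike", price_range=(50, 100), attributes={"Color": "Red"}
)
```

With these filters, only "p2" and "p4" survive, and they stay in the order the ranking module produced.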

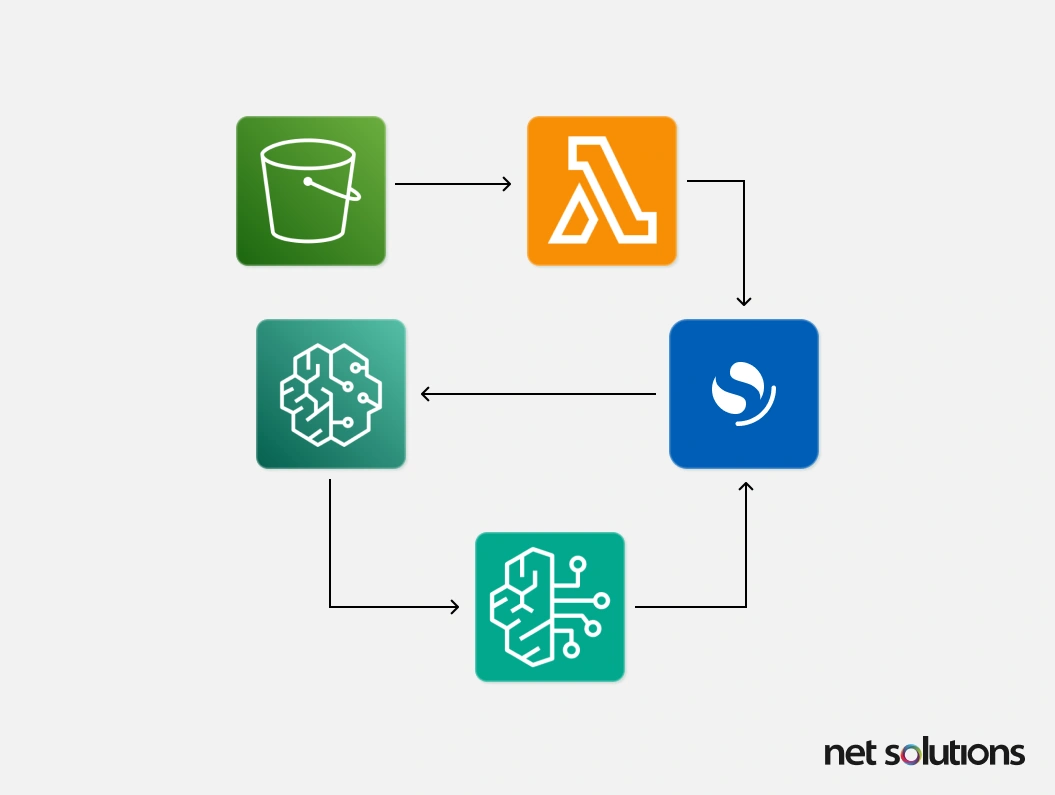

The AWS Advantage: Plug-and-Play Implementation

The beauty of this blueprint lies in its reliance on AWS’s comprehensive suite of services, enabling a truly plug-and-play approach.

- Data Ingestion & Processing: Utilizing AWS Lambda, AWS Glue, and Amazon S3 for efficient ingestion and preparation of product data.

- Embedding Models: Amazon SageMaker for hosting and deploying custom or pre-trained embedding models (for both images and text) or leveraging services like Amazon Rekognition and Amazon Comprehend.

- Vector Database: Amazon OpenSearch Service with k-NN or Amazon Aurora PostgreSQL with pgvector for scalable vector storage and retrieval.

- LLMs & Conversational AI: Amazon Bedrock for accessing foundation models for the chatbot, and Amazon Lex for building conversational interfaces.

- Audio Processing: Amazon Transcribe for speech-to-text conversion.

- Hosting & Deployment: Amazon EC2, AWS Fargate, and Amazon S3 for hosting the frontend application and backend services.

- Monitoring & Analytics: Amazon CloudWatch and AWS X-Ray for observing performance and user behavior, allowing for continuous optimization of the search experience and ranking models.

This integrated ecosystem significantly reduces development time and operational overhead, enabling eCommerce businesses to deploy and iterate on their Gen AI search solutions rapidly. Generative AI can also simplify platform migration by transforming content into a platform‑neutral format and reducing SEO risks.

Conclusion

A Gen AI‑powered search solution built on AWS isn’t just a technical upgrade; it’s a competitive strategy. By embedding RAG-enabled catalogs, leveraging vector databases, employing intelligent ranking, and delivering multimodal conversational experiences, retailers can transform their search bar into a discovery engine that delights customers and drives revenue.

At Net Solutions, we’ve developed an AI-powered Product Search Accelerator, a pre-architected solution built on AWS that “thinks like your customer,” offering intent-aware natural-language search, real-time adaptability to user behavior, and multimodal capabilities across text, image, and voice.

Under the hood, the accelerator combines Amazon Bedrock, Amazon Personalize, and Amazon OpenSearch Service, enabling teams to launch faster on a ready-to-integrate architecture, deliver deeply personalized experiences, and scale effortlessly as the business grows.

Beyond technology, the accelerator drives real business impact, boosting engagement and conversions by serving products users want, enabling smarter cross-sell and upsell flows, and supporting dynamic customer segmentation. Clients can expect a faster time‑to‑value, going live in weeks rather than months, without heavy development cycles. Built on AWS, the solution inherits enterprise‑grade security and elastic scalability while understanding user intent beyond keywords. It comes backed by deep domain expertise in intelligent search, personalization, and AWS data services, coupled with a business-outcome-driven execution model.

If you’re curious about what an AI-powered product search journey could look like for your brand, and which AI development services could support it, we can explore your current search experience and see how our accelerator can take it to the next level – no pitch, just possibilities!