When the Large Language Model (LLM) revolution first swept through the technology landscape, the conversation was dominated by sweeping predictions – the automation of all things, the end of traditional coding. As a technology services organization specializing in product engineering for mid-tier clients in eCommerce, retail, and sports media, we knew the hype needed to be grounded in the practical reality of continuous delivery.

We didn’t just observe the change; we integrated it. Over the past year of pilots and production work, we’ve developed a concrete Generative AI Playbook. This framework defines where LLMs provide tangible, reliable value and, crucially, where they do not. This isn’t theoretical work; it is drawn from hundreds of production deployments and countless engineering hours.

We’ve moved past the novelty phase and are ready to share the lessons learned about how LLMs fundamentally augment our human product engineering capabilities, delivering faster, cleaner, and more cost-effective solutions for our partners.

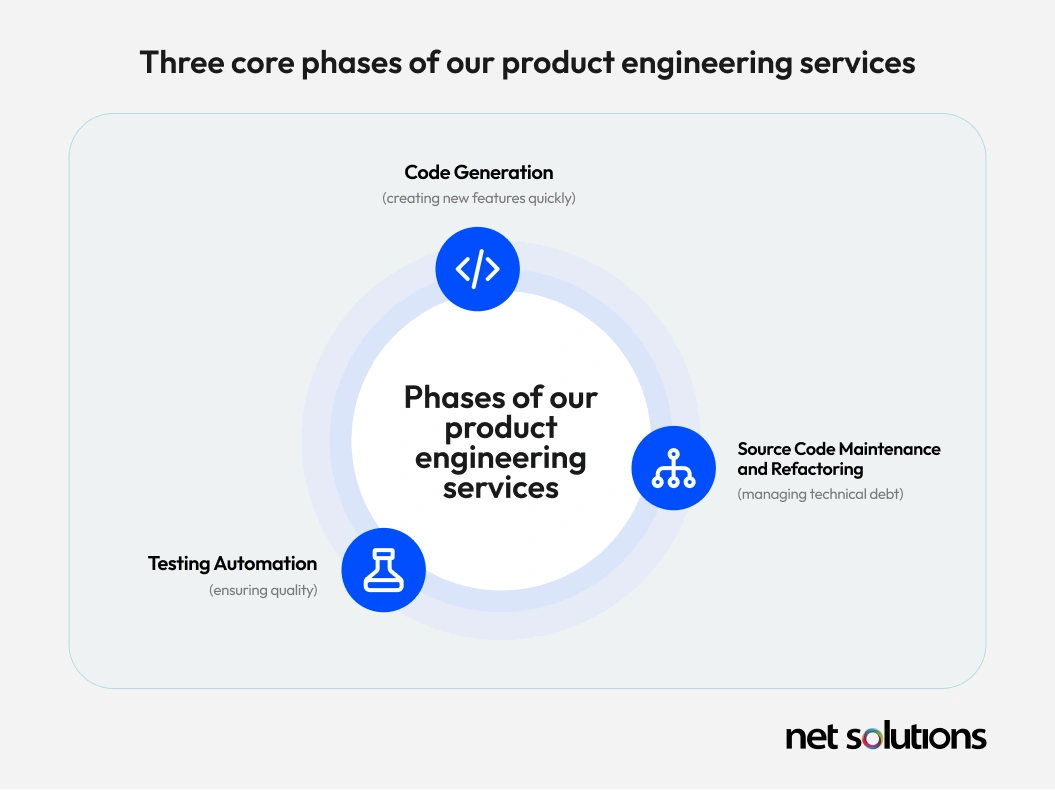

Phase 1: Accelerating Velocity of New Features with Generative Code

When we first started exploring this, code generation was the obvious starting point. It’s what everyone talked about, and frankly, it’s where the most immediate, measurable velocity boost exists. The model’s ability to predict the next logical line of code, or even the next logical block, felt transformative, particularly for highly repetitive tasks. But, as we quickly found out, the value is not evenly distributed across the engineering lifecycle.

Where Generative Code Shines – The Velocity Boost

We discovered that LLMs offer a spectacular return on investment when the task is localized, repeatable, and needs only local context.

The biggest win, hands down, has been scaffolding and boilerplate generation. Think about launching a new feature for an eCommerce platform: you need a new database model, a corresponding API endpoint, data validation logic, serialization layers, and basic CRUD (Create, Read, Update, Delete) handlers. All of this is structurally predictable.

Our engineers used to spend hours on this preparatory work. Now, we feed the model the schema and the desired endpoint signature, and it generates the basic structure in seconds. This is more than a time saver: it frees the human engineer to focus on the complex business logic that truly differentiates the client’s product, be it a nuanced dynamic pricing algorithm for retail or a high-speed data stream aggregator for sports media.
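To make "structurally predictable" concrete, here is a minimal sketch of the kind of scaffold we mean. Everything in it is an assumption made for illustration: the `Product` model, its fields, and the in-memory `ProductStore` are hypothetical stand-ins for a real database model and API layer, not code from a client project.

```python
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class Product:
    """Hypothetical model; the field names are illustrative only."""
    sku: str
    name: str
    price_cents: int

    def __post_init__(self) -> None:
        # Validation logic of the predictable, boilerplate kind.
        if not self.sku:
            raise ValueError("sku must not be empty")
        if self.price_cents < 0:
            raise ValueError("price_cents must not be negative")


class ProductStore:
    """In-memory CRUD handlers standing in for a real persistence layer."""

    def __init__(self) -> None:
        self._items: dict[str, Product] = {}

    def create(self, product: Product) -> dict:
        if product.sku in self._items:
            raise KeyError(f"duplicate sku: {product.sku}")
        self._items[product.sku] = product
        return asdict(product)  # serialization step

    def read(self, sku: str) -> Optional[dict]:
        product = self._items.get(sku)
        return asdict(product) if product is not None else None

    def update(self, sku: str, **changes) -> dict:
        if "sku" in changes:
            raise ValueError("sku is the key and cannot be changed")
        current = self._items[sku]  # raises KeyError if the sku is unknown
        # Rebuilding the dataclass re-runs the validation in __post_init__.
        updated = Product(**{**asdict(current), **changes})
        self._items[sku] = updated
        return asdict(updated)

    def delete(self, sku: str) -> bool:
        return self._items.pop(sku, None) is not None
```

None of this is difficult to write, which is the point: it is the sort of code worth delegating so that review time goes to the business logic layered on top of it.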

Our operational data, gathered through various production environments, shows that the maximum performance uplift occurs when we leverage the LLM for generation at the individual function or method level. When the context provided to the model is constrained, e.g., “Write a function that safely validates a credit card number using the Luhn algorithm and returns a boolean”, the model’s output quality is almost flawless. The task is bounded, the inputs and outputs are clear, and the solution is well-represented in the training data. This focused, surgical application of code generation has been instrumental in shrinking our feature deployment windows.
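For reference, a bounded task like the Luhn prompt above has a solution roughly like the following. This is our own minimal sketch rather than verbatim model output; the function name `is_valid_luhn` and the decision to tolerate spaces and hyphens are assumptions.

```python
def is_valid_luhn(card_number: str) -> bool:
    """Return True if card_number passes the Luhn checksum."""
    # Tolerate common separators; reject anything else that is not a digit.
    cleaned = card_number.replace(" ", "").replace("-", "")
    if len(cleaned) < 2 or not (cleaned.isascii() and cleaned.isdigit()):
        return False

    total = 0
    # Walk from the rightmost digit and double every second digit.
    for index, char in enumerate(reversed(cleaned)):
        digit = int(char)
        if index % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9  # same as summing the two digits of the product
        total += digit
    return total % 10 == 0
```

Note that the checksum only catches typing errors; it says nothing about whether the card exists or can be charged.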

The Architectural Chasm – Where LLMs Fall Short

If LLMs are phenomenal mechanics, they are decidedly not master architects.

What we quickly realized in our initial projects is that the LLM’s ability to understand syntax and generate local code does not translate into an ability to understand and design complex, large-scale systems. We experimented with pushing the boundaries, asking models to draft high-level design artifacts based on user requirements. This led us right into the Architectural Chasm.

Specifically, generating sophisticated design models, like UML diagrams for complex business class hierarchies or detailed Entity-Relationship Diagrams (ERDs) for normalized database schemas, consistently resulted in generic, abstract, or functionally incorrect outputs. We learned that the model, excellent as a tool for local execution, lacks the necessary capabilities for systemic reasoning at scale. This failure to perform at the architectural layer has been a critical lesson in setting realistic expectations for our clients.

Our View on Why Systemic Reasoning is Still a Human Domain

Why does a technology so brilliant at generating code for the Luhn algorithm stumble when asked to model an entire inventory system? Our technical assessment, corroborated by observations from across the developer community, boils down to a fundamental limitation: The Lack of an Internal System Model.

The architectural chasm exists because an LLM, by its nature, is a sequence prediction engine, not a system modeler.

- The Context Window Constraint: Complex architectural decisions rely on non-local dependencies, the flow of data across dozens of files, security boundaries, and scalability trade-offs. An LLM, restricted by its context window, simply cannot hold the entire, evolving software universe in its active memory. It can see the function it’s writing, but it cannot maintain the global state of the application’s architecture.

- Pattern Prediction vs. Strategic Intent: LLMs are trained on code patterns, not on the strategic, business-driven intent behind a project. Designing a class hierarchy requires understanding long-term maintenance, human organizational structure, and future scaling needs. This requires human judgment and domain-specific foresight, attributes that fall outside the model’s core task of token prediction.

This observation led to a critical component of our playbook: The Human Imperative.

Generative AI can execute, but it cannot architect. Senior human architects remain the mandatory decision-makers for defining system architecture, complex data models, and high-level strategy. The LLM serves as a pair programmer for implementing the details of that architecture, but never for setting the blueprint itself. This firm guardrail ensures the solutions we deliver to our mid-tier clients are robust, scalable, and built for the long haul.

Phase 2: Mastering, Refactoring, and Managing Large Codebases

The second phase of the product lifecycle – maintenance, refactoring, and technical debt mitigation – is often the most costly. Here, we saw the promise of LLMs serving as “instant experts” on complex codebases. Again, the truth was nuanced, delivering both striking wins and profound limitations.

The Entity Success – Finding the Needles

When faced with a massive, unfamiliar, or poorly documented codebase, our LLM tools provide a phenomenal speed boost in syntactic identification. Our engineers no longer have to spend days manually tracing dependencies. We can prompt the model to “List all classes that handle payment processing logic in this 20,000-line repository,” and it will return the relevant file and class names almost instantly.

The LLM acts as an expert assistant capable of rapid, high-volume code summarization. It easily identifies method signatures, property declarations, and configuration variables. For a retail client trying to quickly locate the entry points for a specific discount calculation engine written five years ago, this capability accelerates the investigation phase from a multi-day slog into an hour-long task. It effectively transforms an intimidating codebase into a searchable, navigable document.

This ability to quickly map and summarize individual components is undeniably valuable. However, the true challenge of refactoring begins immediately after: establishing the relationships between those components.

The Architectural Chasm Deepens – Where the Playbook Stalls

Our most significant realization from the past year, and perhaps the most important caveat in our playbook, is this: LLMs struggle to establish accurate, non-local relationships between software entities.

We could easily ask the model, “What is the name of the Order Processing Class?” and get a correct answer. But when we asked, “Explain how the Order Processing Class is initialized and how it passes data to the Inventory Service via the message queue,” the model often failed. It might return a plausible-sounding, but fundamentally flawed, explanation of the relationship, occasionally even hallucinating dependencies that don’t exist.

This limitation is directly tied to the challenges we discussed in Phase 1:

- They are Token Predictors, Not Abstract Reasoners: LLMs are powerful pattern recognition systems trained through next-token prediction on code syntax and common linguistic patterns. They excel at local tasks (like generating a function) where the context is provided, but, unlike a compiler or a human architect, they do not build or manipulate an internal, abstract representation of the entire program’s logic and architecture.

The Human Imperative in Source Code Maintenance

In our current playbook, the LLM is treated as a sophisticated entity search engine and a local code summarizer. It is the human engineer who must still take the LLM’s entity findings, synthesize them with the knowledge of the system’s requirements, and perform the strategic work of refactoring. For a complex retail migration from a monolithic inventory system to a microservices architecture, the LLM can generate the new API definition for a service, but the human must define the boundary, the data contracts, and the overall migration strategy.

By limiting the LLM’s role to local, human-verified tasks, we keep our refactoring efforts safe, functional, and aligned with the long-term architectural strategy.
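Part of that human verification can be mechanized. The sketch below is one possible approach, not a description of our tooling: it uses Python's standard `ast` module to check whether the classes and imports a model claims to have found actually appear in a source file. The helper names and the sample source are hypothetical, and the check only covers existence, not whether the described relationship is correct.

```python
import ast


def defined_classes(source: str) -> set[str]:
    """Names of all classes defined anywhere in the source text."""
    tree = ast.parse(source)
    return {node.name for node in ast.walk(tree) if isinstance(node, ast.ClassDef)}


def imported_modules(source: str) -> set[str]:
    """Module names that the source text imports."""
    modules: set[str] = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            modules.update(alias.name for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module:
            modules.add(node.module)
    return modules


def unverified_claims(source: str, claimed_classes, claimed_imports) -> dict:
    """Return the claimed names that cannot be found in the source."""
    return {
        "classes": set(claimed_classes) - defined_classes(source),
        "imports": set(claimed_imports) - imported_modules(source),
    }


# Hypothetical file contents and hypothetical model claims.
SAMPLE_SOURCE = '''
import queue
from inventory.client import InventoryClient

class OrderProcessor:
    pass
'''

suspect = unverified_claims(
    SAMPLE_SOURCE,
    claimed_classes=["OrderProcessor", "PaymentRouter"],
    claimed_imports=["queue", "kafka"],
)
```

Here `suspect` flags `PaymentRouter` and `kafka` as names the model mentioned that the file does not contain. Anything flagged goes back to an engineer; anything not flagged still needs a human to confirm how the pieces actually interact.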

Phase 3: The Quality Multiplier on Testing Automation

If architectural analysis is the LLM’s weak point, then testing automation is where Generative AI provides the maximum, most immediate return on investment. This is where the models work with their inherent capabilities, rather than against them, making it a critical pillar of our playbook.

The Ideal Fit – Why Testing Aligns Perfectly with LLM Strengths

Software engineering literature has reported high success rates for LLMs in test generation, and our year in production confirms this emphatically. The reason is rooted in the structure of testing itself:

The vast majority of effective unit and integration tests come down to two things: building mock objects for setup, and a localized check that the left-hand side equals the right-hand side.

- Repetitive Setup: Tests require extensive but predictable mock data (e.g., a mock user object, a dummy API response, a structured JSON payload). This is a highly repetitive task, and one that LLMs excel at.

- Localized Reasoning: A unit test is intentionally scoped to check a single function or method. This localization perfectly fits within the context window limitations that defeated the LLM during architectural analysis. The LLM can easily see the function under test, its inputs, and its expected behavior without ingesting the entire multi-million-line codebase.

By treating the test suite as a massive library of high-quality, localized code snippets, we’ve found that the LLM’s strengths (pattern matching, synthesis, and rapid localized generation) are perfectly leveraged.

Implementation – From User Story to Test Case

We now integrate LLMs into our testing workflow from the moment a requirement is defined.

First, we input a User Story or a functional requirement (e.g., “As a shopper, I must receive an error if I try to use a gift card and a coupon code simultaneously”). The LLM then generates a broad set of test cases, including unit tests, integration tests, and critical negative test cases, that cover the explicit requirement and its major edge scenarios. This process helps ensure we don’t miss obvious failure paths.
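As an illustration of what comes back for the gift card example, here is a minimal sketch using Python's built-in `unittest`. The `validate_checkout` function and `DiscountConflictError` are hypothetical stand-ins for the code under test; a real checkout rule would live in the client's codebase and carry more conditions.

```python
import unittest
from typing import Optional


class DiscountConflictError(ValueError):
    """Hypothetical error for combining a gift card with a coupon code."""


def validate_checkout(gift_card_code: Optional[str], coupon_code: Optional[str]) -> None:
    """Toy rule from the user story: either code is fine, both together is not."""
    if gift_card_code and coupon_code:
        raise DiscountConflictError("A gift card and a coupon code cannot be combined")


class ValidateCheckoutTests(unittest.TestCase):
    def test_gift_card_and_coupon_together_is_rejected(self):
        # The explicit requirement: a negative test case.
        with self.assertRaises(DiscountConflictError):
            validate_checkout("GC-100", "SAVE10")

    def test_gift_card_alone_is_accepted(self):
        validate_checkout("GC-100", None)

    def test_coupon_alone_is_accepted(self):
        validate_checkout(None, "SAVE10")

    def test_neither_code_is_accepted(self):
        validate_checkout(None, None)

    def test_empty_string_counts_as_absent(self):
        # Edge case: a blank form field should not trigger the conflict.
        validate_checkout("", "SAVE10")
```

Assuming the file is saved as a module, `python -m unittest` will discover and run the class. The edge case about empty strings is the kind of path that is easy to overlook when writing tests by hand.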

Second, the LLM is highly effective at suggesting and writing the mock objects required for isolation. For an engineer testing a new subscription service for a sports media client, the LLM can generate the necessary mocks for the payment gateway, the user authentication service, and the database repository, ensuring the test focuses solely on the subscription logic itself.
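A minimal sketch of that isolation pattern, using `unittest.mock.Mock` from the Python standard library. The `SubscriptionService` class and the method names on its collaborators (`verify`, `charge`, `save_subscription`) are assumptions invented for this example, not a client API.

```python
from unittest.mock import Mock


class SubscriptionService:
    """Hypothetical service under test; its collaborators are injected."""

    def __init__(self, payment_gateway, auth_service, repository):
        self.payment_gateway = payment_gateway
        self.auth_service = auth_service
        self.repository = repository

    def subscribe(self, token: str, plan: str) -> bool:
        user_id = self.auth_service.verify(token)
        if user_id is None:
            return False
        if not self.payment_gateway.charge(user_id, plan):
            return False
        self.repository.save_subscription(user_id, plan)
        return True


def make_service(charge_succeeds: bool):
    """Build the service with all three external dependencies mocked out."""
    auth = Mock()
    auth.verify.return_value = "user-42"
    gateway = Mock()
    gateway.charge.return_value = charge_succeeds
    repo = Mock()
    return SubscriptionService(gateway, auth, repo), gateway, repo


def test_subscribe_saves_after_successful_charge():
    service, gateway, repo = make_service(charge_succeeds=True)
    assert service.subscribe("valid-token", "premium") is True
    gateway.charge.assert_called_once_with("user-42", "premium")
    repo.save_subscription.assert_called_once_with("user-42", "premium")


def test_subscribe_skips_save_when_charge_fails():
    service, _gateway, repo = make_service(charge_succeeds=False)
    assert service.subscribe("valid-token", "premium") is False
    repo.save_subscription.assert_not_called()
```

Because the gateway, authentication service, and repository are all mocks, a failure in either test points at the subscription logic itself rather than at an external system.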

Third, we use LLMs to analyze code segments with low test coverage. We feed the model the function and ask it to generate the test code required to cover a specific uncovered path. This targeted approach has dramatically accelerated our ability to close coverage gaps, especially in older modules that were deployed before modern test standards were in place.

Our Generative AI Philosophy – The Balanced Advantage

Reflecting on the journey from our early experiments to our established playbook, one truth stands out: Generative AI is not a shortcut; it is a force multiplier for human intelligence.

Our experience over the last year has shown us exactly where the lines are drawn: LLMs are powerful, game-changing tools for localized creation and repetitive rigor (code generation and testing), but they remain unreliable for non-local, abstract reasoning (architecture and complex code relationship analysis).

Our philosophy is simple: The LLM is the Co-Pilot, but the human engineer always holds the controls, owns the output, and is solely responsible for the architectural integrity.

By adopting this realistic, tested, and balanced playbook, we ensure that the efficiencies gained translate directly into tangible value for our mid-tier clients. We deliver the efficiency of a Tier 1 solution provider, allowing our partners in eCommerce, retail, and sports media to move faster and build more robust, scalable products than ever before.

What area of our Gen AI Playbook interests you most: optimizing your testing workflow, or reviewing your current architectural best practices?